Running Generic Containerized Workload

You can run any containerized workload in Theta EdgeCloud. The workload can be a custom AI model you trained, a financial data analysis job, a scientific or mathematical simulation, or any other computational task that can be containerized. To do this, you first create a custom template for your container and then launch the task from that template.

Create a Custom Template for Your Containerized Workload

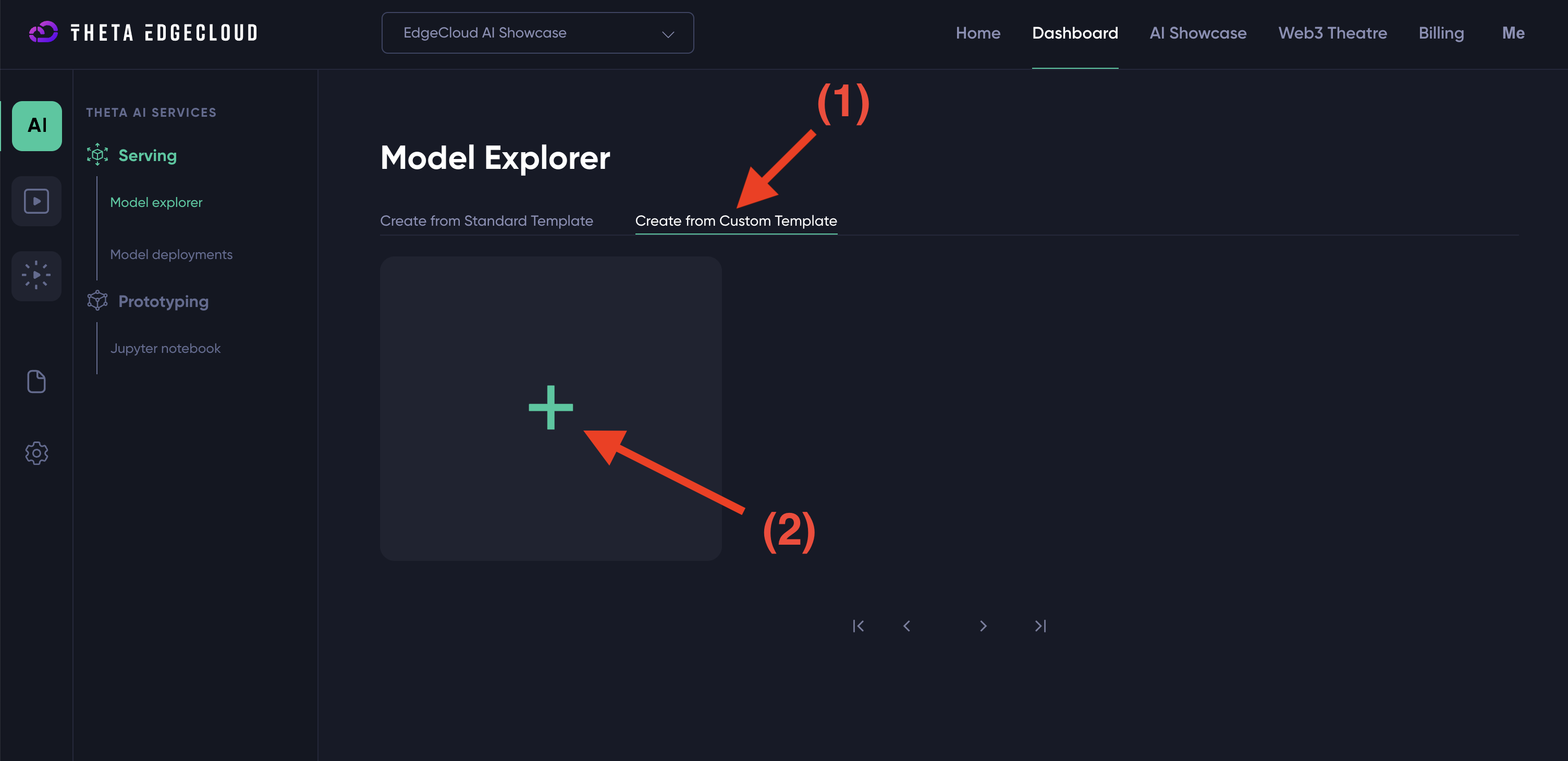

To run the workload in Theta EdgeCloud, first navigate to the "Model Explorer" page, which you can access by clicking the "AI" icon in the left sidebar. On this page, click the "Create from Custom Template" tab, indicated by red arrow (1) in the screenshot. Next, click the "+" sign, indicated by arrow (2), to create a new custom template.

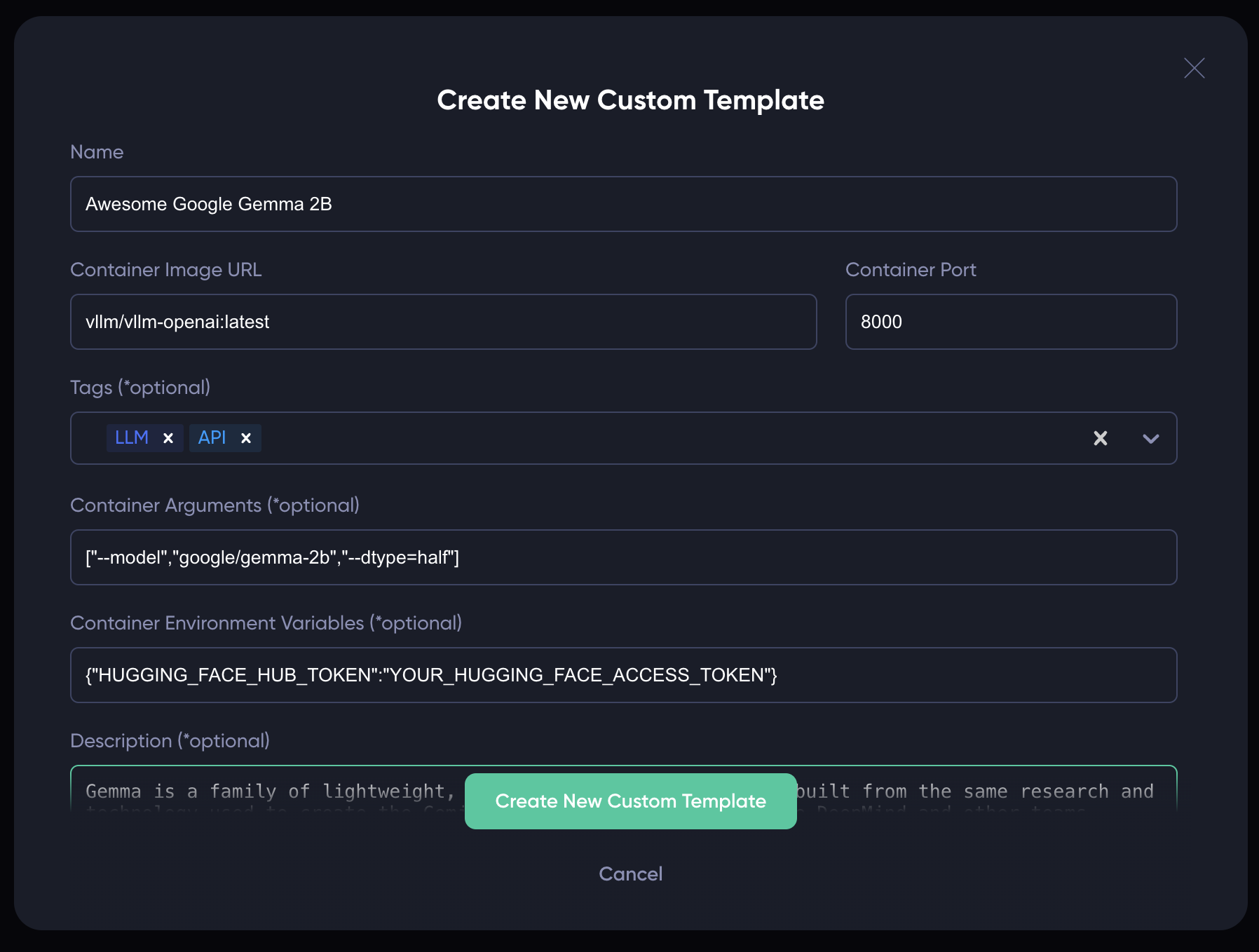

You should now see the "Create New Custom Template" modal. Most of the fields are self-explanatory. In particular:

- Container Image URL: the URL of the container image for your workload. For example, to host the latest vLLM image (https://hub.docker.com/r/vllm/vllm-openai), enter `vllm/vllm-openai:latest`; the `https://hub.docker.com/r/` prefix is not needed.
- Container Port: the port your service listens on inside the container. For example, if a web server inside the container listens on port 8000, enter 8000. This port is mapped to HTTPS port 443 in the actual deployment. Support for multiple ports and port mapping may be added in the future.
- Container Argument (optional): the arguments to pass to the container. In the example shown in the screenshot, we host the Google Gemma-2B model using vLLM, which requires the argument `["--model","google/gemma-2b","--dtype=half"]`.
- Container Environment Variables (optional): the environment variables to run the container with. In our example, Gemma 2B requires your HuggingFace access token, so we provide it with the environment variable `{"HUGGING_FACE_HUB_TOKEN":"YOUR_HUGGING_FACE_ACCESS_TOKEN"}`, where `YOUR_HUGGING_FACE_ACCESS_TOKEN` can be obtained by following this guide. Note that many LLMs, such as Llama, Gemma, and Mistral, require you to accept a license agreement before using the model. You can find the license agreement on the model's HuggingFace model card page; for example, Gemma 2B's license agreement is available here.
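The fields above are filled in through the web form. As a sketch that is not part of any EdgeCloud tooling, the Python snippet below shows one way to prepare the exact strings locally: it derives the image reference from a Docker Hub page URL and serializes the Gemma 2B arguments and environment variables as compact JSON. The helper names `image_reference` and `compact_json` are hypothetical, and reading the token from a local `HUGGING_FACE_HUB_TOKEN` environment variable is an assumption made so the token never has to be pasted into source code.

```python
import json
import os

# Prefix of Docker Hub repository pages such as https://hub.docker.com/r/vllm/vllm-openai
DOCKER_HUB_PREFIX = "https://hub.docker.com/r/"


def image_reference(hub_url: str, tag: str = "latest") -> str:
    """Turn a Docker Hub page URL into an image reference like 'vllm/vllm-openai:latest'."""
    if not hub_url.startswith(DOCKER_HUB_PREFIX):
        raise ValueError(f"not a Docker Hub repository URL: {hub_url!r}")
    repo = hub_url[len(DOCKER_HUB_PREFIX):].strip("/")
    return f"{repo}:{tag}"


def compact_json(value) -> str:
    """Serialize to JSON without spaces after separators."""
    return json.dumps(value, separators=(",", ":"))


# Value for the "Container Argument" field when hosting Gemma 2B with vLLM.
container_args = compact_json(["--model", "google/gemma-2b", "--dtype=half"])

# Value for the "Container Environment Variables" field. Assumption: the token is
# exported locally as HUGGING_FACE_HUB_TOKEN; otherwise the placeholder is used.
token = os.environ.get("HUGGING_FACE_HUB_TOKEN", "YOUR_HUGGING_FACE_ACCESS_TOKEN")
container_env = compact_json({"HUGGING_FACE_HUB_TOKEN": token})

if __name__ == "__main__":
    print(image_reference("https://hub.docker.com/r/vllm/vllm-openai"))
    print(container_args)
    # container_env is deliberately not printed, to avoid echoing the token.
```

Copy the printed values into the corresponding form fields; `container_env` can be inspected the same way when you are sure it is safe to display.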

Once you have filled out the form, click the "Create New Custom Template" button to save the template for later use. After it is created, the custom template should appear on the page like this:

Run the Workload in Theta EdgeCloud

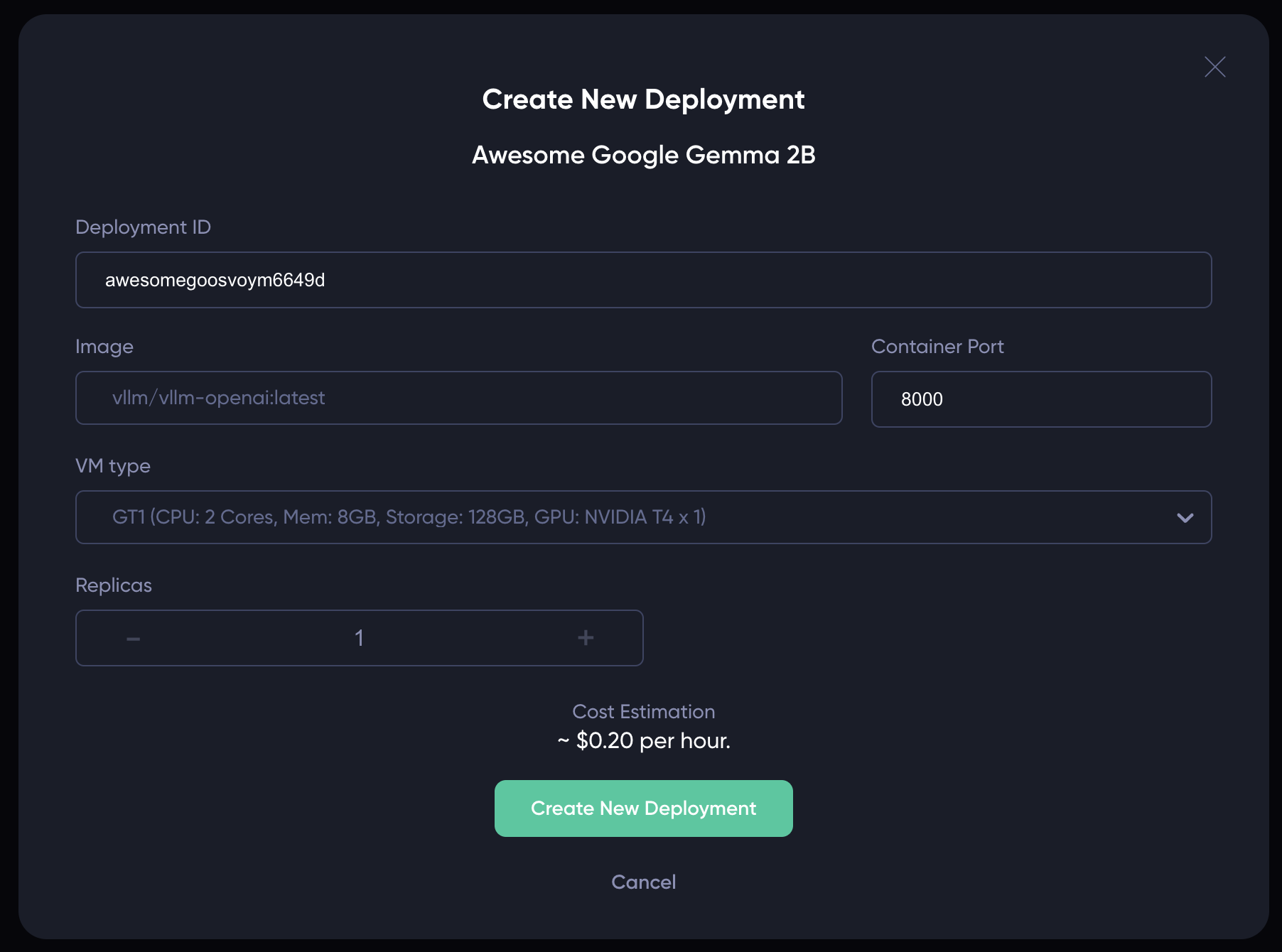

To run the workload, click the custom template you just created; a modal similar to the following should pop up. Choose an appropriate VM type and replica count, then click "Create New Deployment" to deploy the containerized workload to Theta EdgeCloud.
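Which VM type is appropriate depends largely on how much GPU memory the model needs. As a rough, back-of-the-envelope sketch (not an EdgeCloud feature), the weights alone take about parameter count × bytes per parameter, and with `--dtype=half` each parameter occupies 2 bytes. The helper `estimate_weight_gib` is hypothetical, and the example assumes Gemma 2B has roughly 2.5 billion parameters; check the model card for the exact figure. vLLM also reserves a large share of GPU memory for its KV cache, so pick a VM with comfortable headroom above this estimate.

```python
# Rough sizing sketch: estimates only the GPU memory needed to hold model weights.
# It ignores the KV cache, activations, and framework overhead.

# Bytes per parameter for a few common vLLM --dtype values.
BYTES_PER_PARAM = {
    "half": 2,
    "float16": 2,
    "bfloat16": 2,
    "float32": 4,
}


def estimate_weight_gib(num_params: float, dtype: str) -> float:
    """Return the approximate size of the model weights in GiB."""
    try:
        bytes_per_param = BYTES_PER_PARAM[dtype]
    except KeyError:
        raise ValueError(f"unknown dtype: {dtype!r}") from None
    return num_params * bytes_per_param / 2**30


if __name__ == "__main__":
    # Assumption: Gemma 2B has roughly 2.5 billion parameters.
    gib = estimate_weight_gib(2.5e9, "half")
    print(f"~{gib:.1f} GiB for the weights alone")
```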

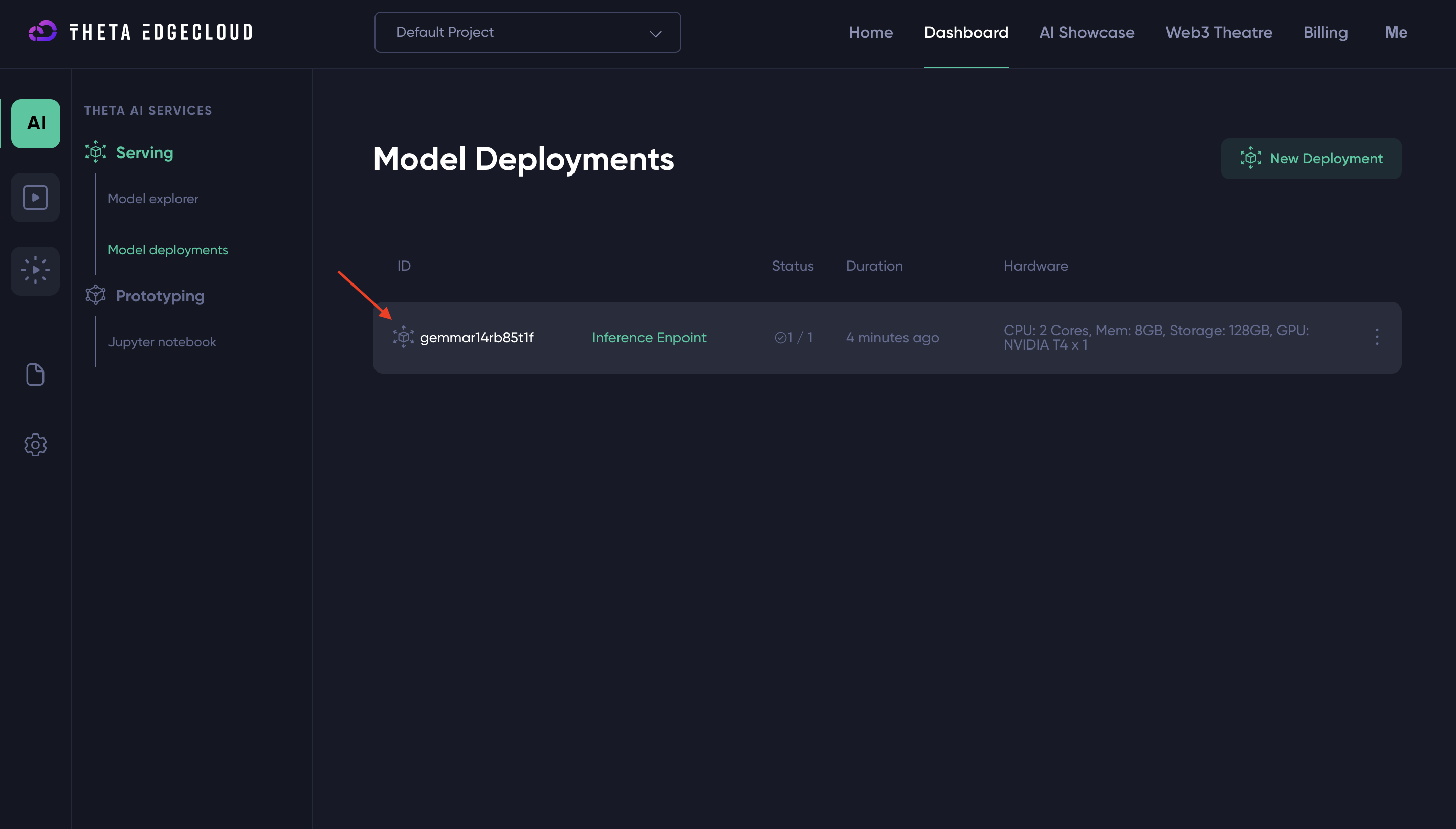

If the deployment succeeds, a new row appears on the "Model Deployments" page. Once the "Inference Endpoint" turns green, you can click it to access your web service endpoint. You can also click the row to view the deployment details, just as for deployments created from a standard template.
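Because the example template runs vLLM's OpenAI-compatible server, its endpoint should accept OpenAI-style completion requests over HTTPS (container port 8000 is exposed on port 443). The sketch below assumes that setup. `ENDPOINT` is a hypothetical placeholder for the URL behind the "Inference Endpoint" link, and `build_completion_request` and `complete` are illustrative helpers built on Python's standard library, not an EdgeCloud SDK. By default, vLLM serves the model under the name passed to `--model`, which is why `google/gemma-2b` is used as the model name.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the URL behind the "Inference Endpoint" link.
ENDPOINT = "https://your-deployment.example.com"


def build_completion_request(endpoint: str, model: str, prompt: str,
                             max_tokens: int = 64) -> urllib.request.Request:
    """Build a POST request for vLLM's OpenAI-compatible /v1/completions route."""
    body = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/v1/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(endpoint: str, model: str, prompt: str) -> str:
    """Send the request to a live endpoint and return the first choice's text."""
    req = build_completion_request(endpoint, model, prompt)
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["text"]


if __name__ == "__main__":
    # Only builds the request; call complete(...) once your endpoint is live.
    req = build_completion_request(ENDPOINT, "google/gemma-2b", "The capital of France is")
    print(req.get_method(), req.full_url)
```

Separating request construction from sending keeps the request shape easy to inspect before you point `complete` at a real deployment.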

Update a Custom Deployment Template

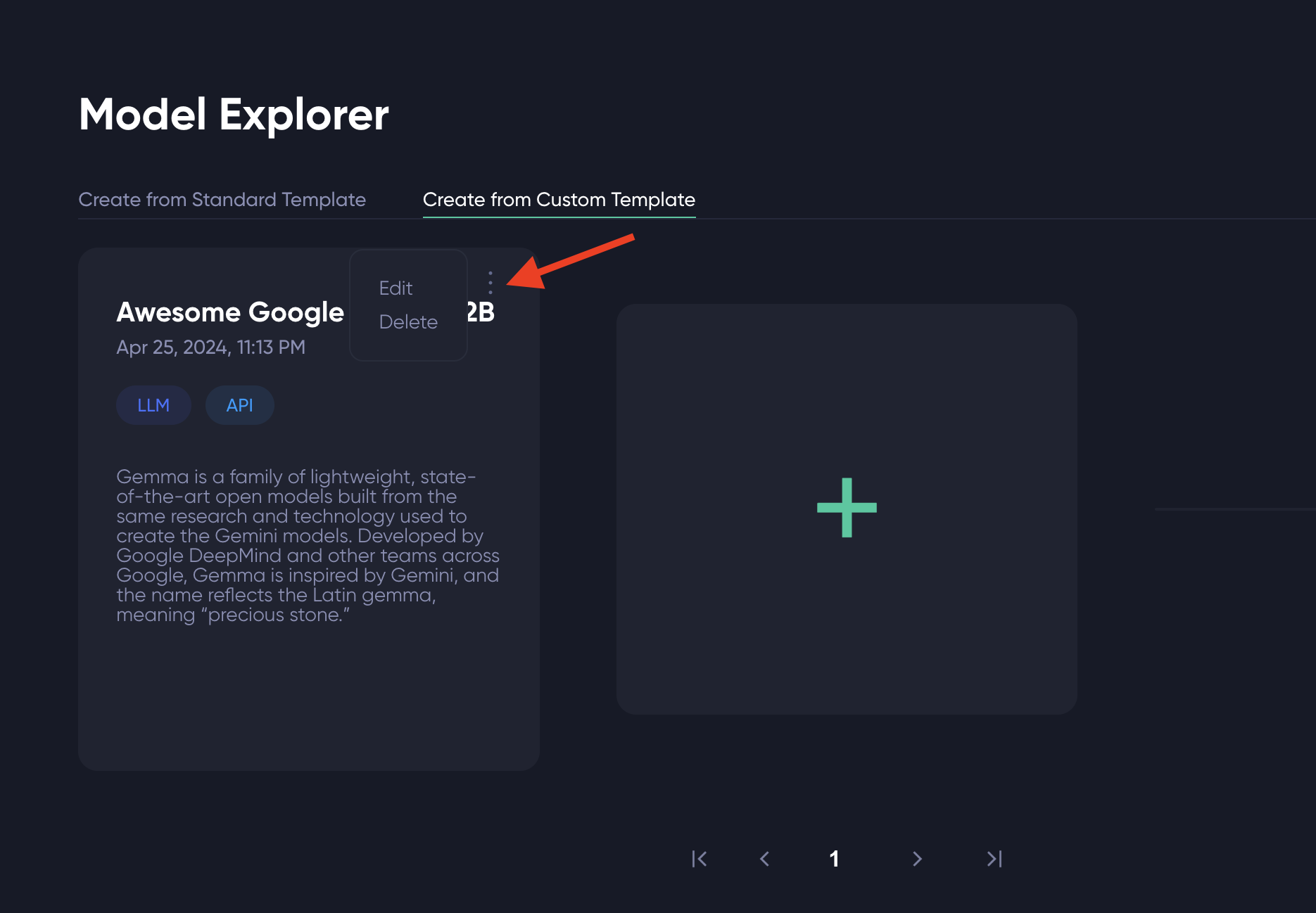

To update a custom deployment template you created, click the three-dot icon in the top-right corner of the template. Then click "Edit" to update the template or "Delete" to delete it.
