On-demand Model Inference APIs

Serverless AI development powered by Theta EdgeCloud

Our new On-demand AI Model Inference API Service enables serverless development for building and scaling intelligent applications. With this feature, you can access powerful AI models, ranging from cutting-edge LLMs to GenAI models, instantly through a simple API, without provisioning or managing servers. Whether you're prototyping a smart assistant or deploying a production-grade AI solution, the pay-as-you-go model means you pay only for what you use, while the Theta EdgeCloud platform handles the rest. It's a fast way to bring AI into your apps: no infrastructure to manage, just a simple API integration.

In the backend, these AI models run on Theta EdgeCloud's hybrid cloud-edge infrastructure, which dynamically sources idle GPU capacity from cloud providers, enterprise data centers, and community-operated edge nodes. Based on real-time demand for model inference, an intelligent scheduler continuously optimizes model placement and resource allocation to keep latency low, availability high, and costs efficient, even during peak hours. This distributed architecture allows the platform to scale while minimizing wasted capacity and delivering consistent performance for both development and production workloads.

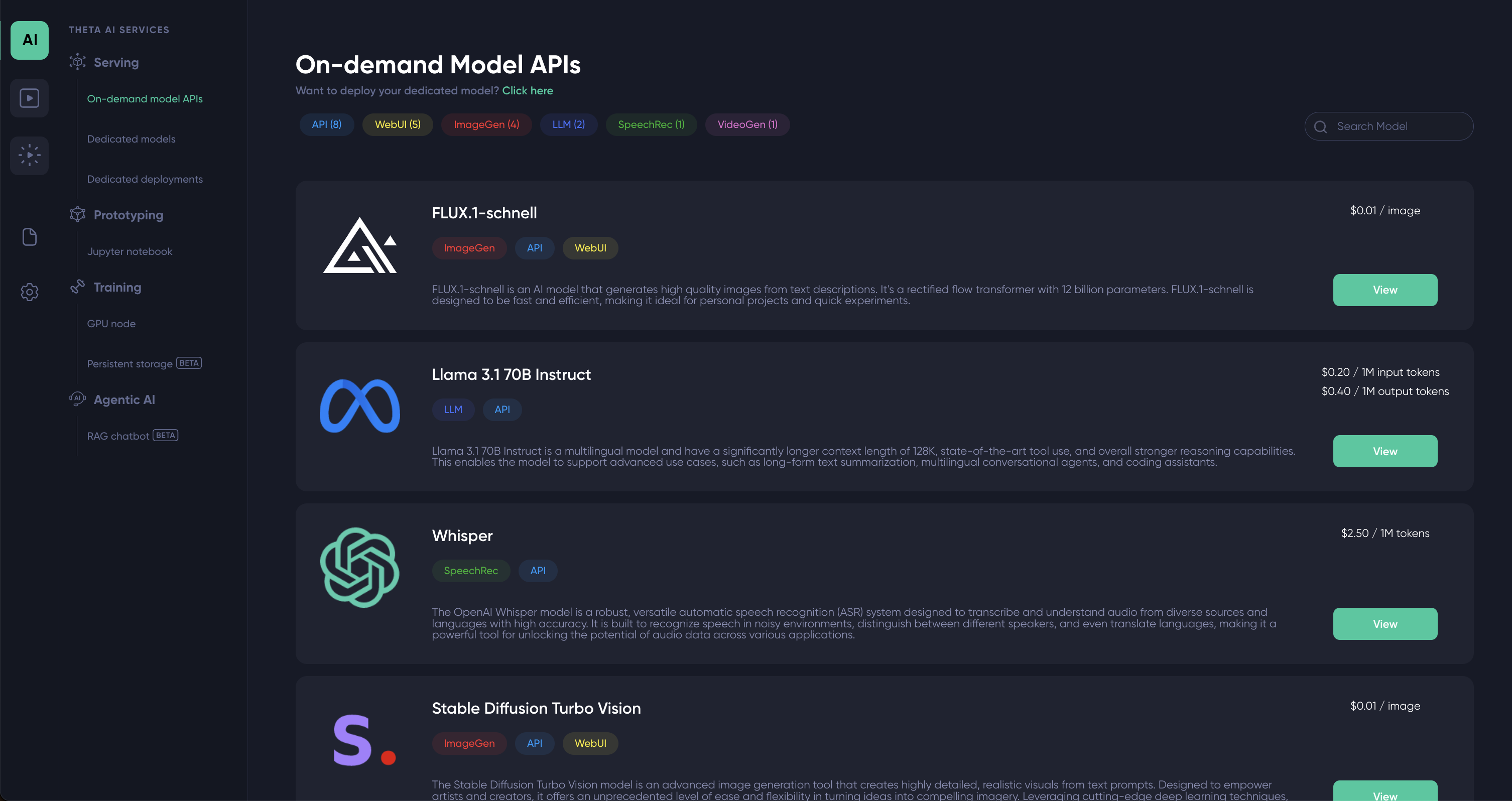

This document will guide you through using the On-Demand Model API service, available HERE. The link will take you to a dashboard similar to the one shown below, featuring a curated list of cutting-edge generative AI models, including Flux, Llama 3.1, Whisper, and Stable Diffusion.

To use a model, click its green "View" button. The API pricing details are displayed just above the "View" button.

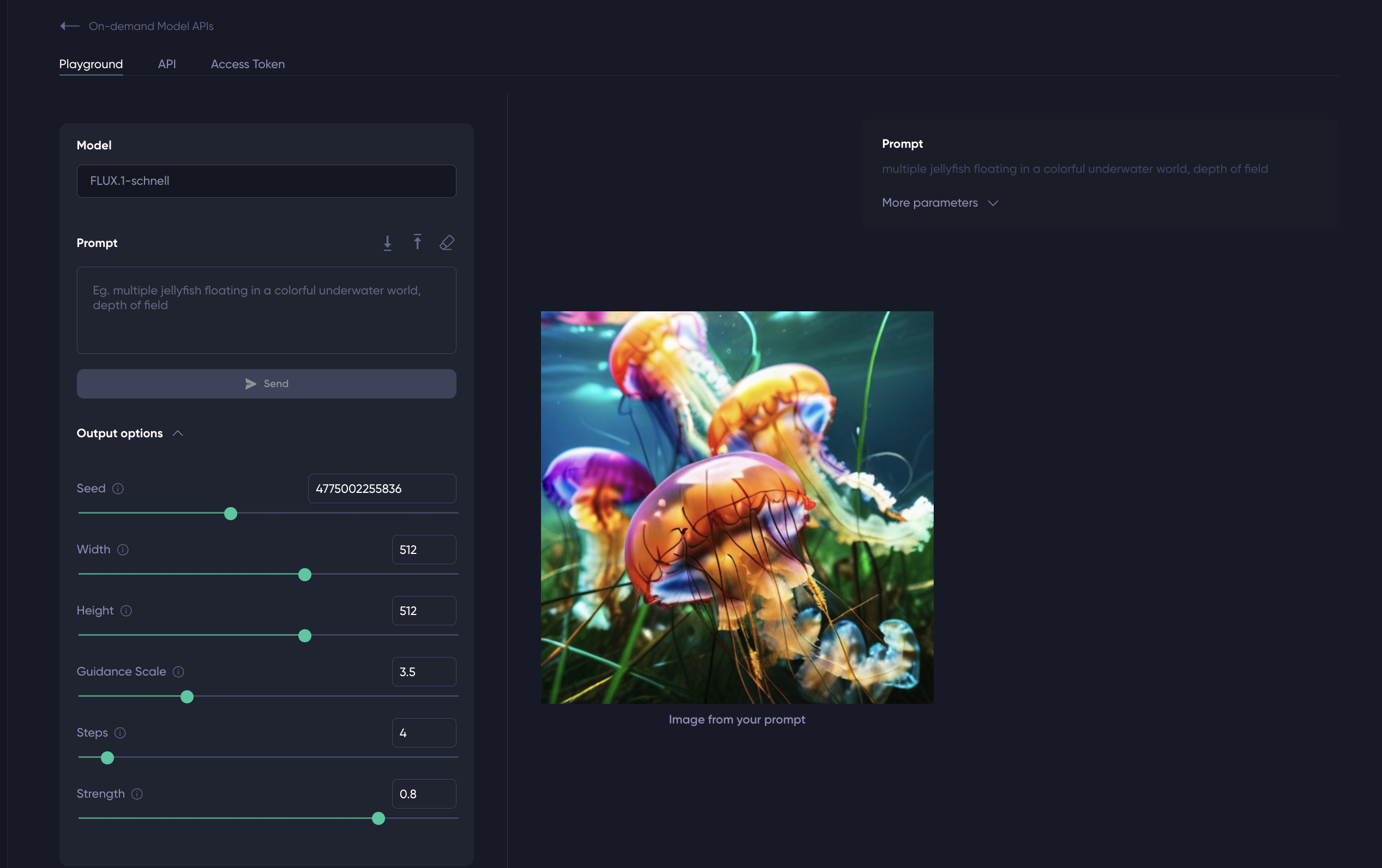

Using FLUX.1-schnell as an example, after clicking the "View" button, you'll be taken to a page with three tabs. The first tab, "Playground", allows you to interact with the model directly through the UI. For instance, the Playground for FLUX.1-schnell accepts a text prompt along with generation parameters such as image resolution, and generates an image based on your input when you click the "Send" button.

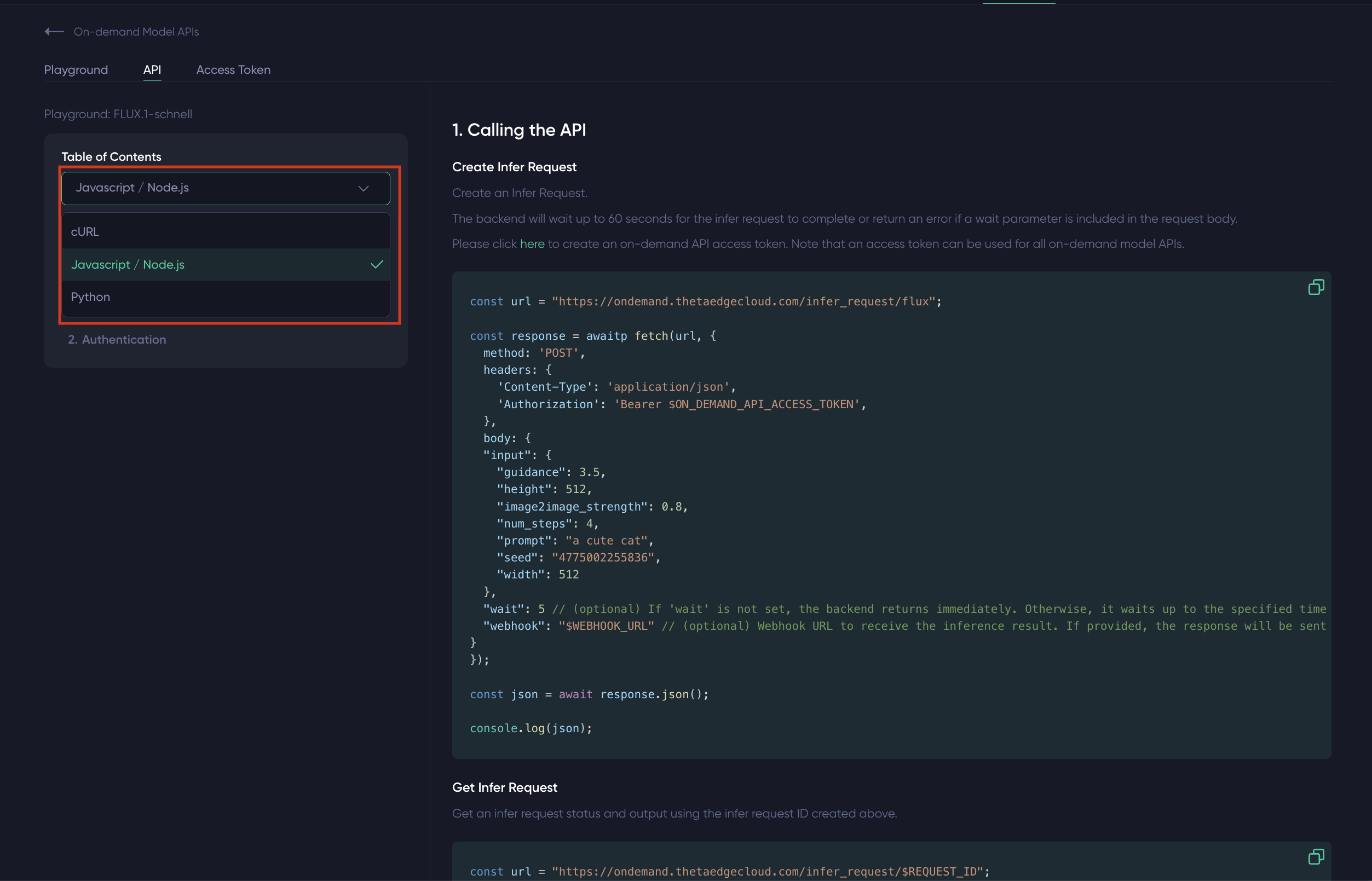

The second tab is particularly useful for developers, as it provides detailed API documentation for the currently selected model. It includes API specifications and example code snippets in multiple languages, such as cURL, JavaScript / Node.js, and Python. You can select your preferred language from the dropdown menu highlighted in the red box.
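As a rough illustration of the kind of call those snippets make, here is a minimal Python sketch that builds a JSON POST request for an image generation model such as FLUX.1-schnell. The endpoint URL, the payload field names (`prompt`, `width`, `height`), and the bearer-token header are all assumptions made for this example; the API tab of the selected model shows the actual values.

```python
import json
import urllib.request

# Hypothetical placeholder; copy the real endpoint URL from the model's API tab.
ENDPOINT = "https://example.invalid/flux-1-schnell"


def build_request(prompt, width, height, access_key, endpoint=ENDPOINT):
    """Build (without sending) a JSON POST request for an image generation call."""
    # Assumed field names; the API tab lists the actual request schema.
    payload = {"prompt": prompt, "width": width, "height": height}
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumed auth scheme; check the API tab for the actual header.
            "Authorization": f"Bearer {access_key}",
        },
    )


def send(request, timeout=60):
    """Send the request and return the raw response body as bytes."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


if __name__ == "__main__":
    req = build_request("a lighthouse at dawn", 1024, 1024, "YOUR_ACCESS_KEY")
    print(req.get_method(), req.full_url)
    # send(req) would perform the network call once real values are filled in.
```

Keeping request construction separate from sending makes it easy to inspect the request before any paid inference call is made.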

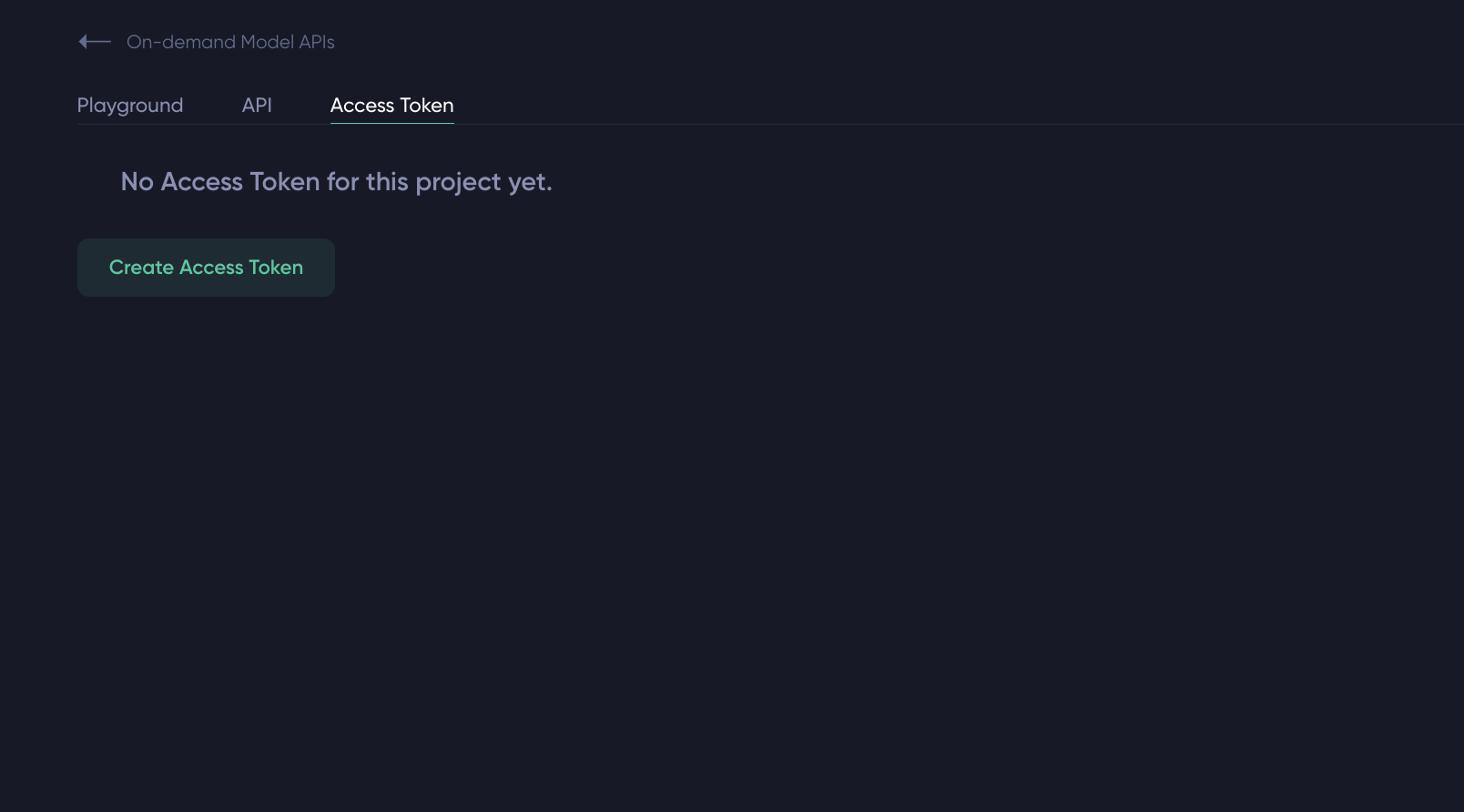

Accessing the model APIs requires an access key, which you can manage in the third tab. You can generate as many access keys as needed, and each one can be renamed, updated, or deleted at any time. Note that each access key is valid across all model APIs.
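Because a single key works for every model API, one common pattern is to load it once from the environment and reuse it for all calls. The sketch below assumes a hypothetical environment variable name (`THETA_EDGECLOUD_ACCESS_KEY`) and a bearer-token header; neither is confirmed by this guide, so adapt both to what the API tab shows.

```python
import os

# Hypothetical variable name, chosen for this example only.
KEY_ENV_VAR = "THETA_EDGECLOUD_ACCESS_KEY"


def load_access_key(env=None):
    """Return the access key from the environment, or raise if it is missing."""
    env = os.environ if env is None else env
    key = env.get(KEY_ENV_VAR, "").strip()
    if not key:
        raise RuntimeError(f"Set {KEY_ENV_VAR} to one of your access keys")
    return key


def auth_headers(access_key):
    """Headers shared by every model call. Bearer auth is an assumption."""
    return {"Authorization": f"Bearer {access_key}"}


if __name__ == "__main__":
    demo_env = {KEY_ENV_VAR: "demo-key"}
    headers = auth_headers(load_access_key(demo_env))
    # The same headers would accompany calls to any model API.
    for model in ("FLUX.1-schnell", "Whisper"):
        print(model, sorted(headers))
```

Reading the key from the environment keeps it out of source control, and deleting or regenerating a key in the dashboard then only requires updating one variable.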

While the on-demand AI model APIs are handy for serverless development, there are times when developers may want dedicated model deployments to optimize performance and latency. For those cases, please check out our guides for Dedicated AI Model Serving on Theta EdgeCloud.
