Run Your Tasks on Community EdgeCloud Nodes

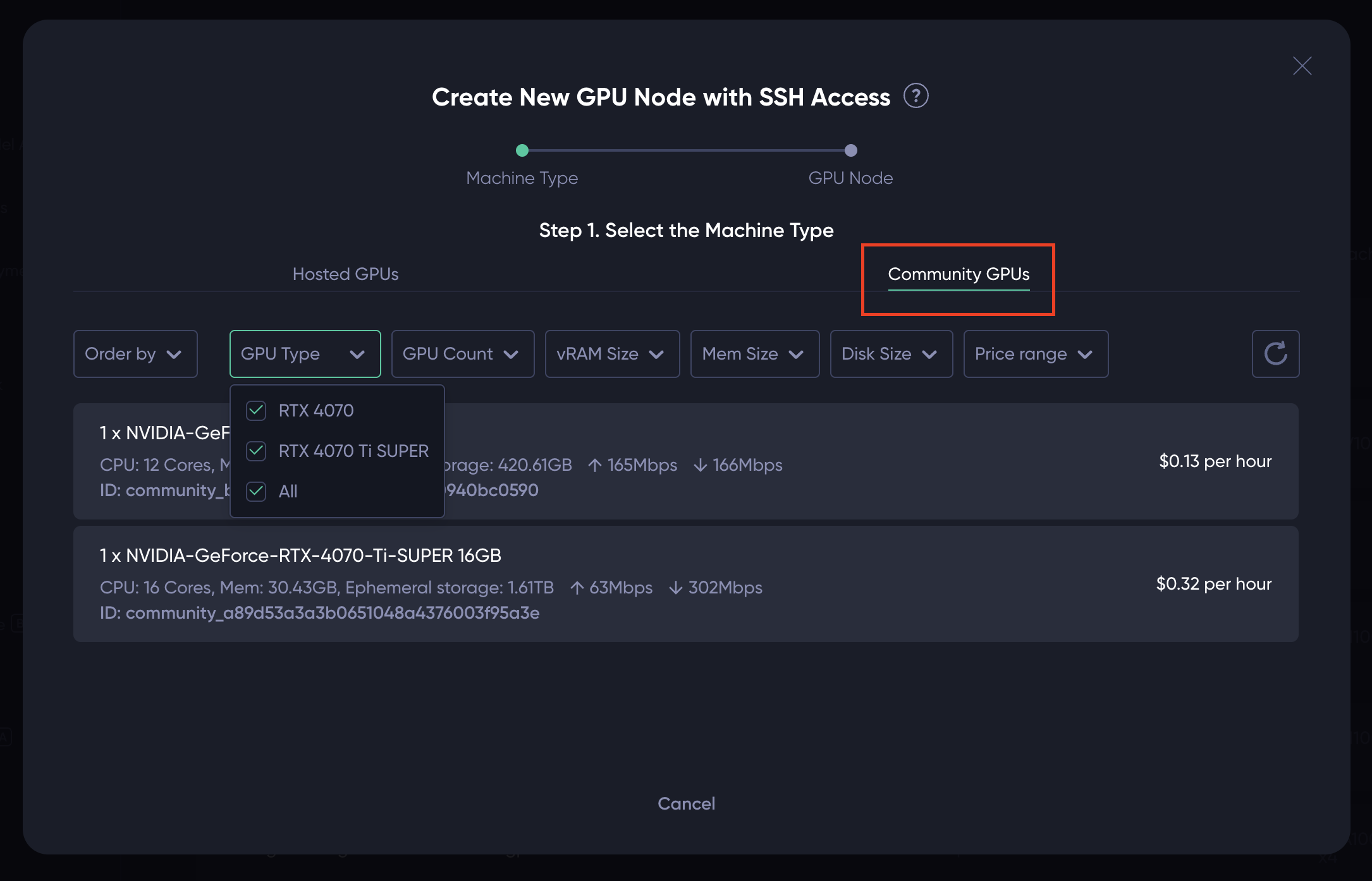

1. Use Community Nodes as GPU Machines with SSH Access

You can use a community node as a GPU machine with SSH access. Navigate to the GPU Node feature page, click the "New GPU Node" button, and select the "Community GPUs" tab. You should then see a UI similar to the following, which lets you select a community node and then configure it as a GPU machine with SSH access. The UI also lets you sort the list by price, vRAM size, and other attributes, and filter the GPU machines by GPU type, vRAM size, and other metrics.

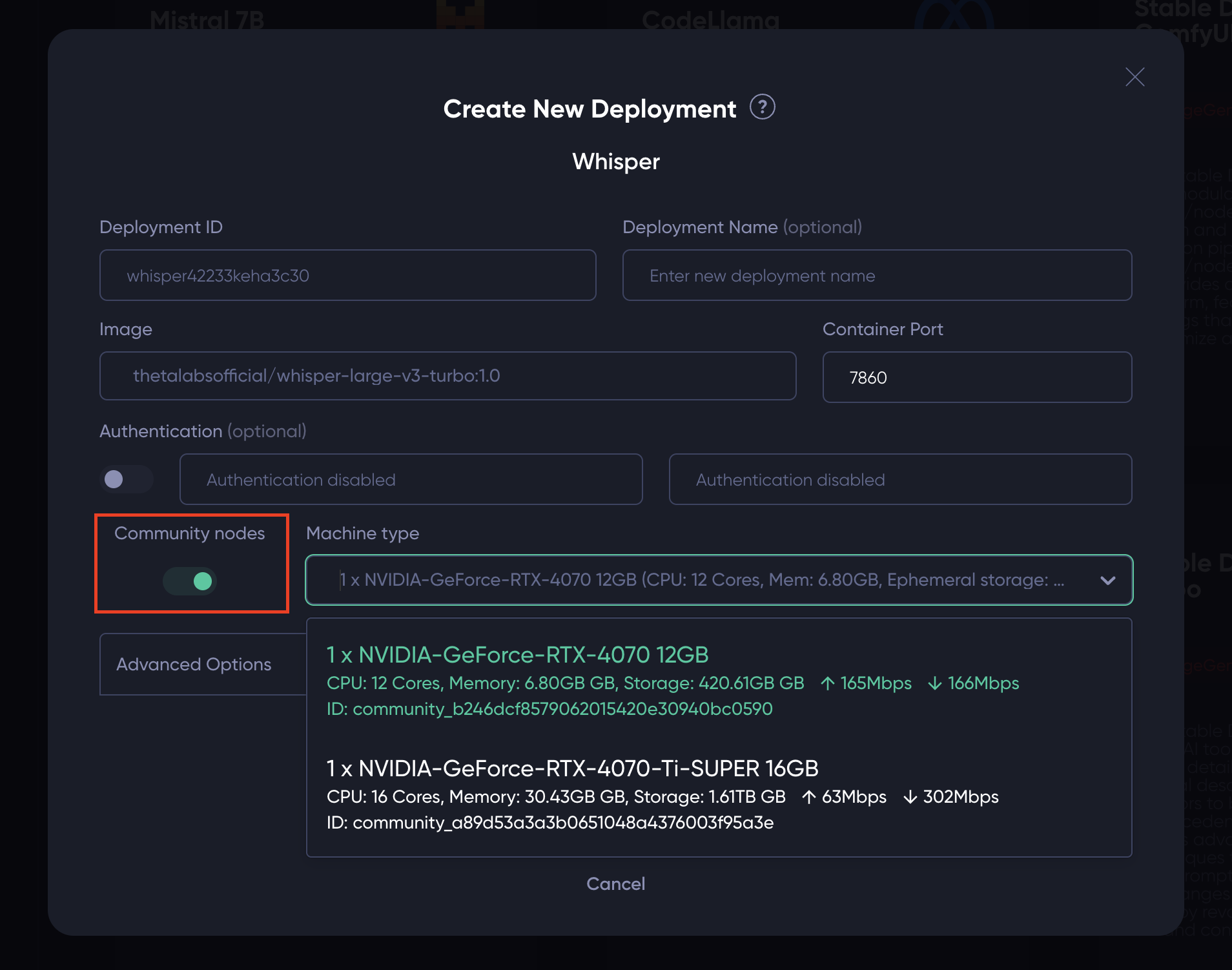
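The console performs this sorting and filtering for you. The sketch below only illustrates what "filter by GPU type and vRAM, then order by price" amounts to, using hypothetical records. The `GpuNode` fields, the `select_nodes` helper, and the sample node IDs and prices are assumptions made up for illustration; they are not EdgeCloud's data model or API.

```python
from dataclasses import dataclass


@dataclass
class GpuNode:
    # Hypothetical fields for illustration only; not EdgeCloud's schema.
    node_id: str
    gpu_type: str
    vram_gb: int
    price_per_hour: float


def select_nodes(nodes, gpu_type=None, min_vram_gb=0,
                 order_by="price_per_hour", descending=False):
    """Keep nodes matching the GPU type (if given) and minimum vRAM,
    then sort them by the named field."""
    matches = [
        n for n in nodes
        if (gpu_type is None or n.gpu_type == gpu_type)
        and n.vram_gb >= min_vram_gb
    ]
    return sorted(matches, key=lambda n: getattr(n, order_by), reverse=descending)


if __name__ == "__main__":
    # Hypothetical sample listing.
    nodes = [
        GpuNode("node-a", "RTX 4090", 24, 0.45),
        GpuNode("node-b", "RTX 3090", 24, 0.30),
        GpuNode("node-c", "RTX 4090", 24, 0.40),
        GpuNode("node-d", "RTX 3060", 12, 0.15),
    ]
    # Cheapest-first list of RTX 4090 nodes with at least 24 GB of vRAM.
    for n in select_nodes(nodes, gpu_type="RTX 4090", min_vram_gb=24):
        print(n.node_id, n.price_per_hour)
```

Sorting after filtering keeps the ordering step independent of which filters are active, which mirrors how the list view lets you combine an ordering with any set of filters.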

2. Dedicated Model Deployment Onto Community Nodes

You can use a community node to host a dedicated AI model. Navigate to the Dedicated Model Launchpad page and click the model you want to launch. Then, in the pop-up modal, switch on the "Community Nodes" toggle and select the community machine you want to deploy to from the drop-down list.

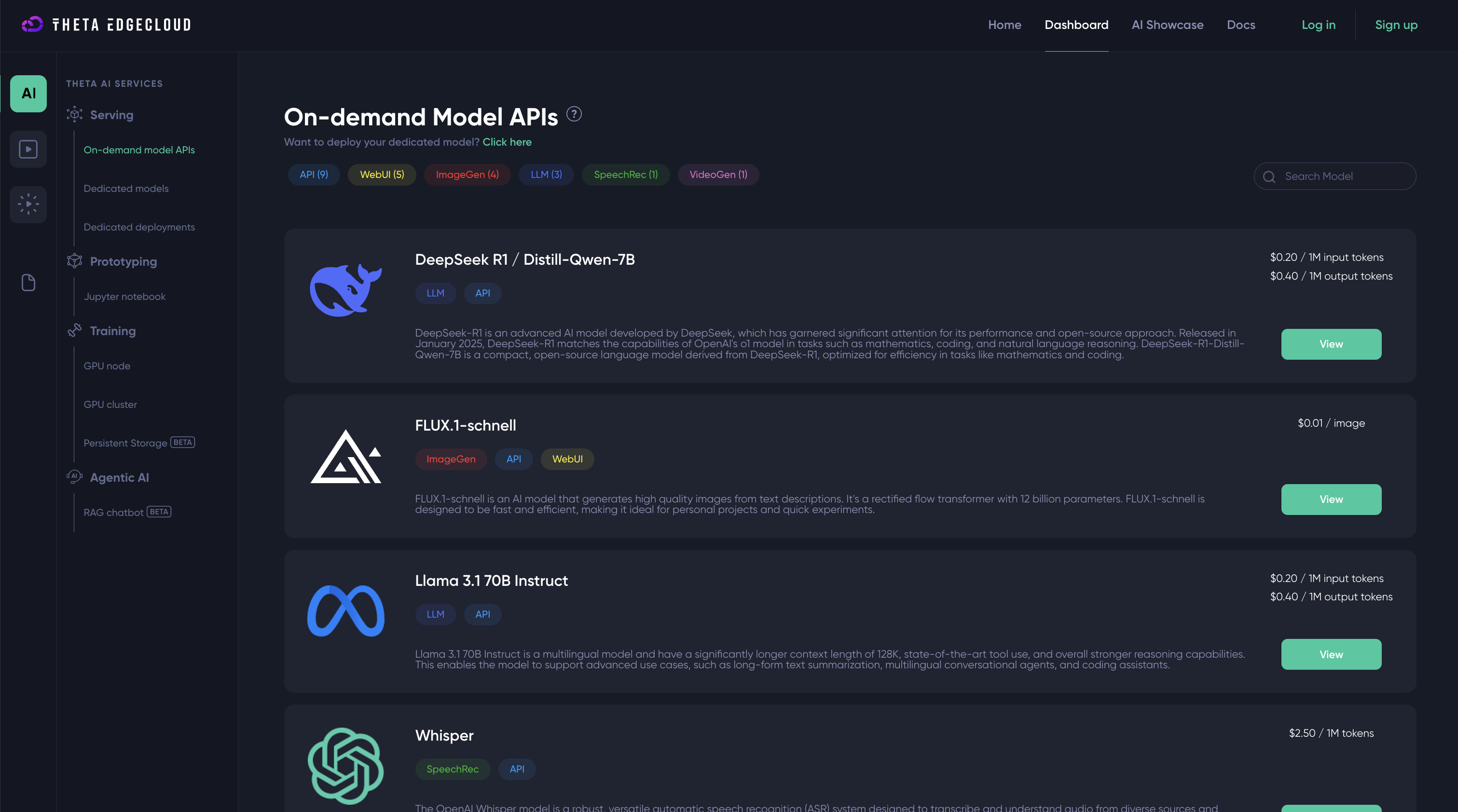

3. On-demand Model APIs Leveraging Community Nodes

By default, the EdgeCloud on-demand model API product runs a portion of its inference jobs on community-operated nodes behind the scenes. Each time you call one of the model APIs, there is a good chance that a community node is executing the corresponding inference task and returning the result to you.
