Agentic Tools for the RAG Chatbot

1. Overview

Tool use extends large language models (LLMs) far beyond static text generation: it lets them interact dynamically with external systems, APIs, databases, and software tools. With these tools, LLMs can retrieve data in real time, execute code, perform complex calculations, generate images, and more. This enables autonomous agents that reason and act in the real world, AI assistants that build applications or analyze data on the fly, and domain-specific systems that combine language understanding with external knowledge and computation.

Theta EdgeCloud has released the "Agentic Tools" feature for its RAG chatbot product, marking a major step forward in agent-driven AI workflows. With Agentic Tools, developers can now equip their chatbots with external capabilities such as web search, API calls, code execution, and document manipulation — enabling the bots to reason, plan, and act beyond static retrieval. This opens the door to more intelligent, goal-directed assistants that can automate complex tasks, integrate with enterprise systems, and dynamically adapt to user needs. For developers, it means faster prototyping, more powerful applications, and a seamless path from natural language to real-world action.

The initial release of the Agentic Tools feature supports user-defined Custom Tools, enabling developers to specify external API endpoints, define when these APIs should be invoked, and configure the parameters to be used — all based on the chatbot's conversation context. In addition to custom tools, the release includes several powerful built-in tools such as Web Search and Text-to-SQL, which offer immediate utility for a wide range of applications. This document provides an overview of these new capabilities and how to leverage them for building more dynamic, context-aware AI agents.

2. Custom Tools

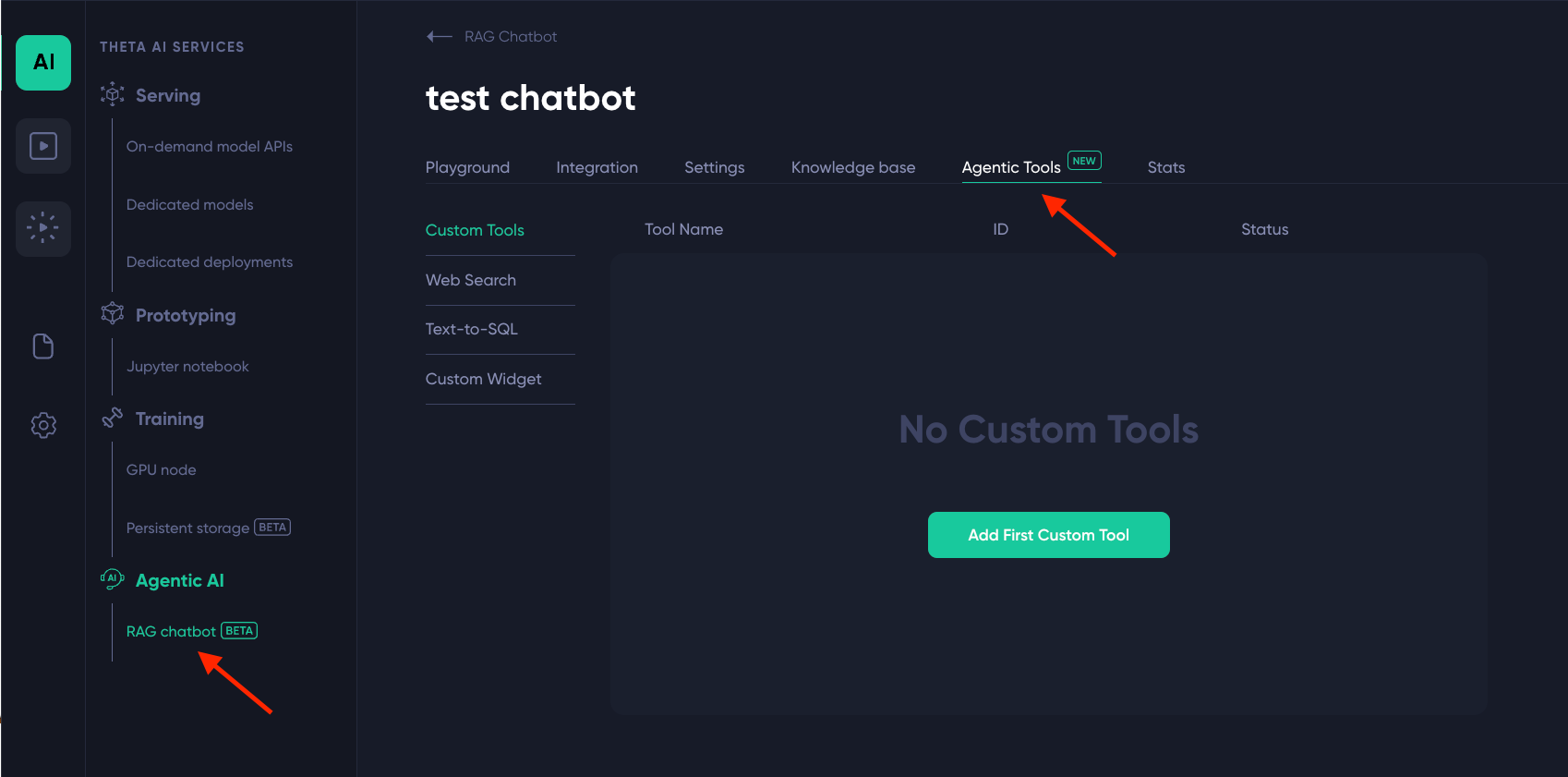

To set up Agentic Tools for a RAG chatbot you have created, navigate to the chatbot's details page and click the “Agentic Tools” tab. There you can add custom tools and enable built-in tools such as Web Search or Text-to-SQL. Below are a few examples of how to configure custom tools that access external resources via GET, POST, and PUT requests.

2.1 GET Example

As an example, we can use Bluesky’s public API as a custom tool to look up trending topics on Bluesky:

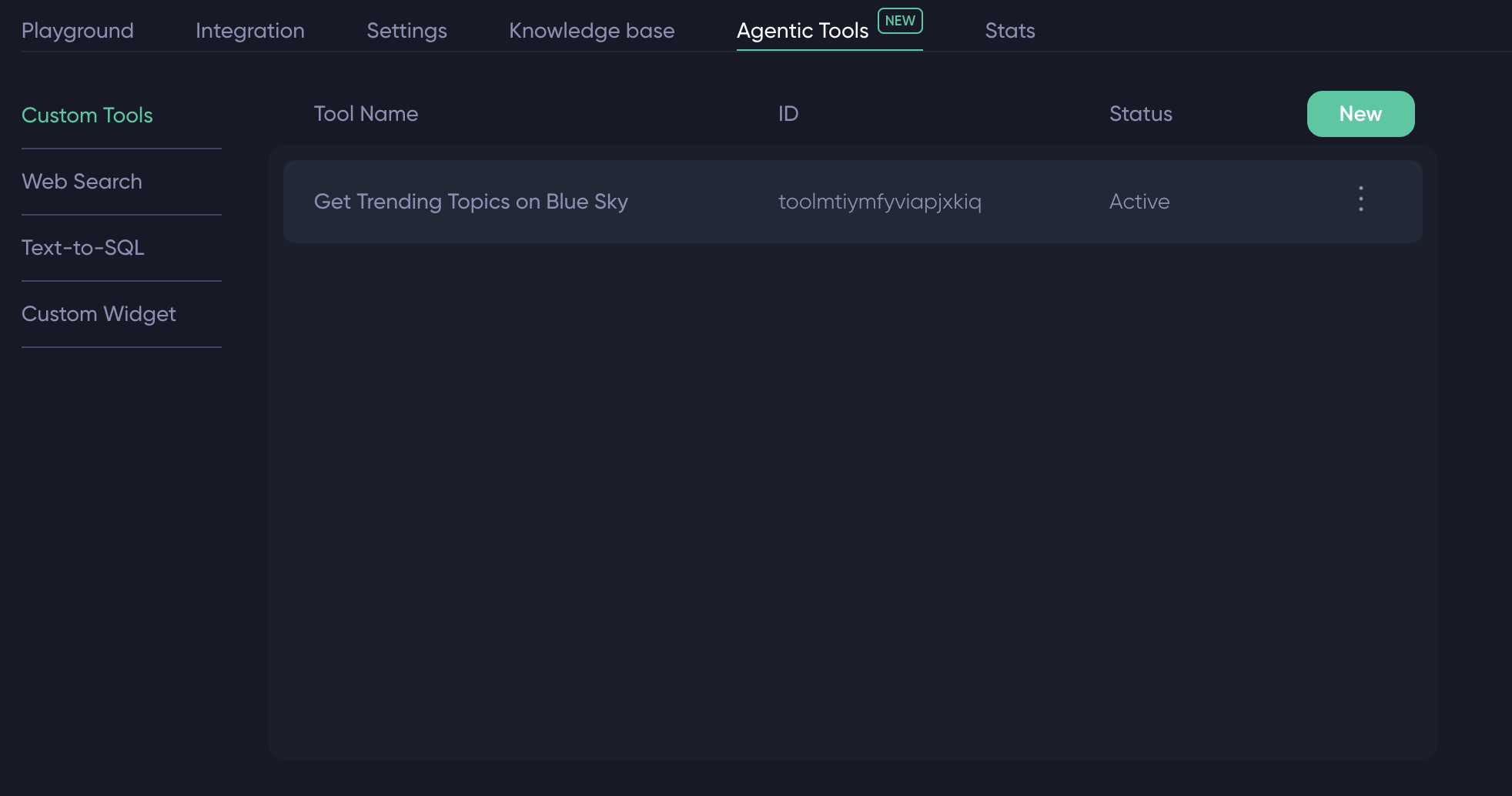

- First, click the “New” button, which opens a modal where you can configure the custom tool

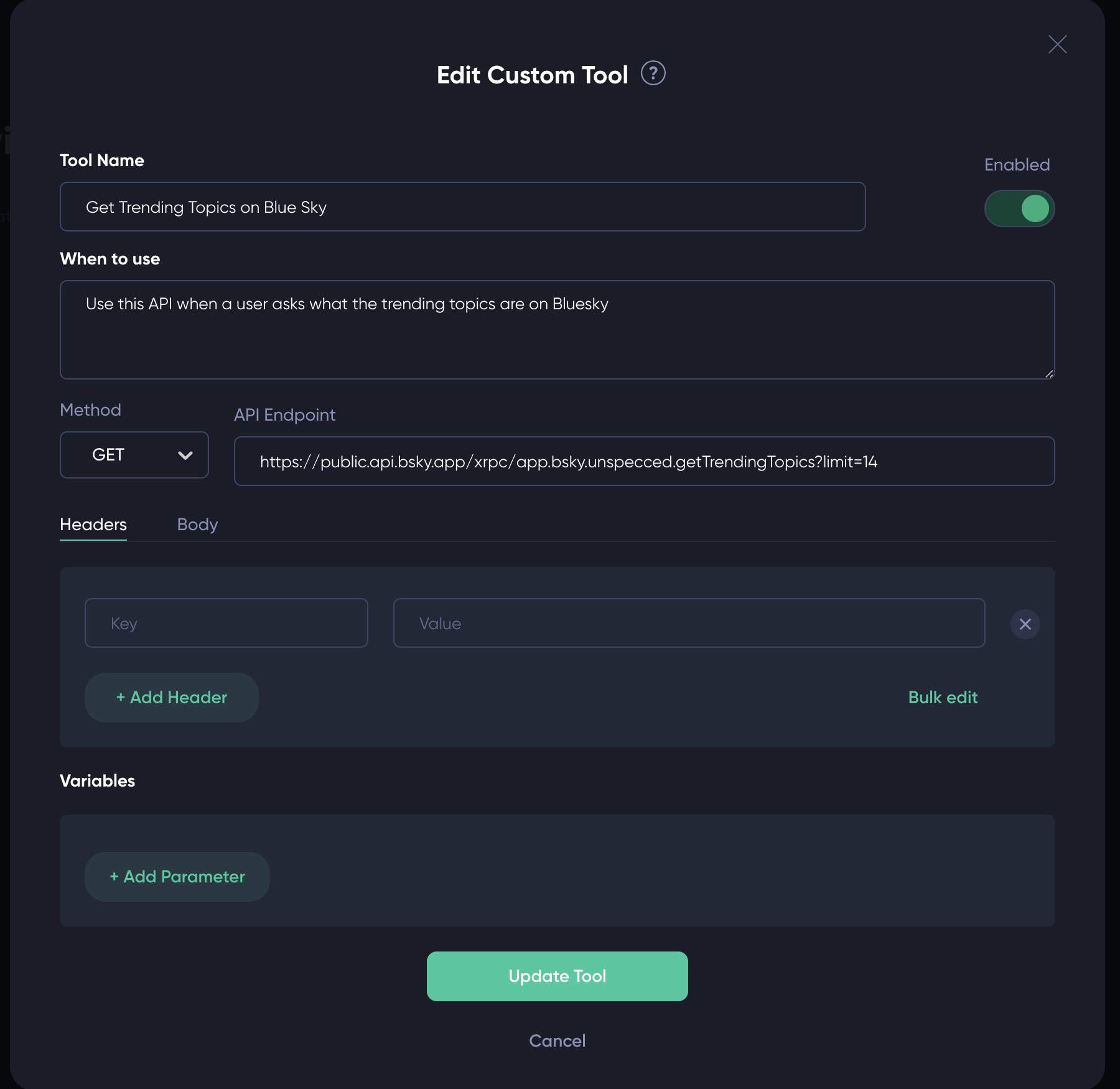

- In the “When to use” field, describe the conditions for the chatbot to call the API endpoint (see below) in natural language

- Select “GET” for the method, since in this example, we only need to retrieve the trending topics on Bluesky

- For the “API Endpoint” field, enter the Bluesky API endpoint for querying trending topics

- Save the settings
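Conceptually, the tool configured above boils down to a plain HTTP GET request. The following is a minimal sketch of that mapping, not EdgeCloud's actual implementation: the `trending_tool` dictionary, its key names, and the endpoint URL are illustrative assumptions that mirror the fields in the modal.

```python
import urllib.request

# Hypothetical representation of the modal's fields. The key names and the
# endpoint URL are assumptions for illustration, not EdgeCloud's schema.
trending_tool = {
    "name": "bluesky_trending",
    "when_to_use": "Use when the user asks what is trending on Bluesky.",
    "method": "GET",
    "endpoint": "https://public.api.bsky.app/xrpc/app.bsky.unspecced.getTrendingTopics",
}


def build_request(tool: dict) -> urllib.request.Request:
    """Turn a tool definition into an HTTP request object (nothing is sent)."""
    return urllib.request.Request(
        url=tool["endpoint"],
        method=tool["method"],
        headers={"Accept": "application/json"},
    )


request = build_request(trending_tool)
print(request.get_method(), request.full_url)
```

Passing `request` to `urllib.request.urlopen` would perform the actual call. In the product, the chatbot decides when to make that call based on the “When to use” description.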

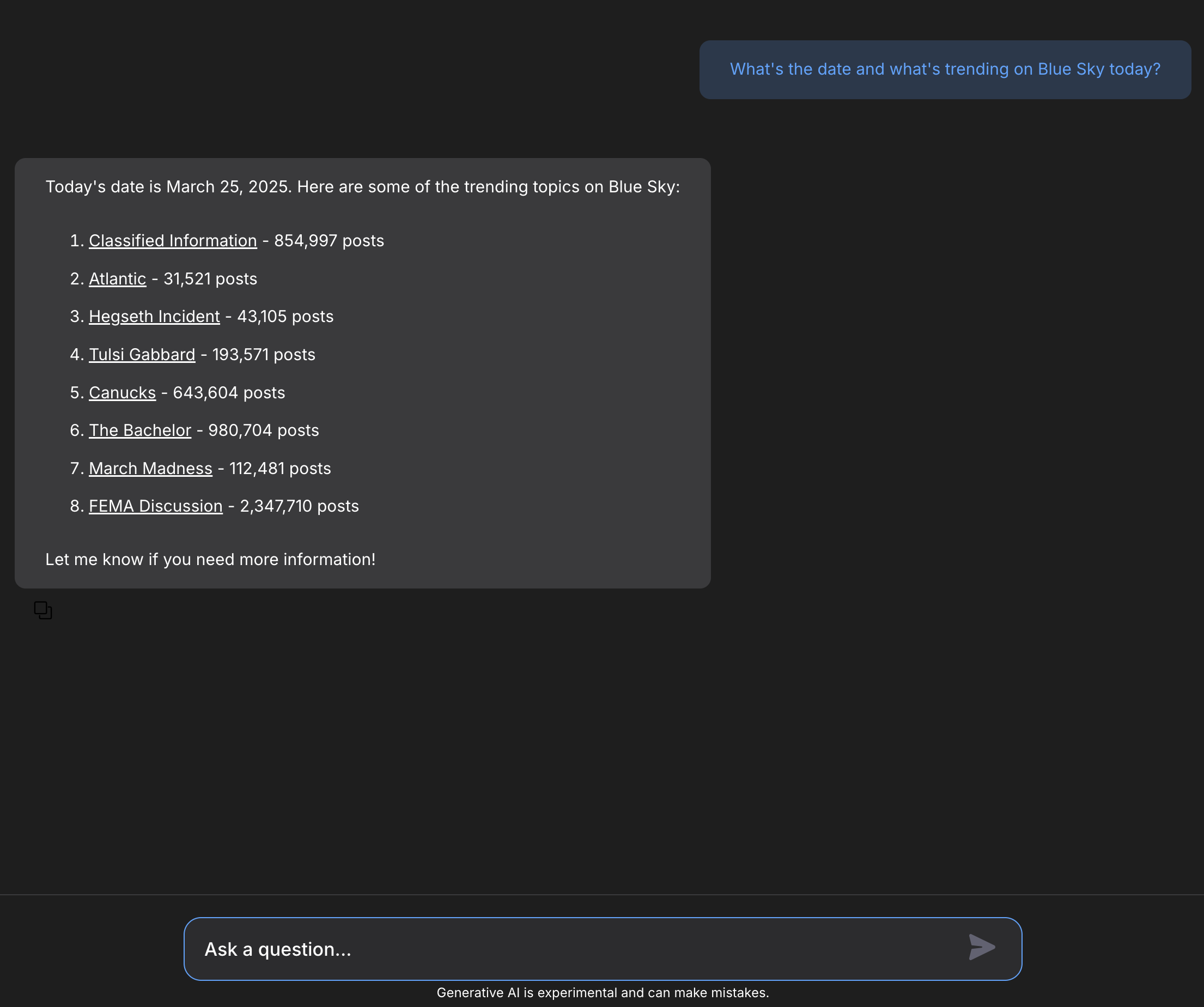

Now, in the chat interface, ask what’s trending on Bluesky, and the chatbot will call the Bluesky API and then summarize the trending topics for you! It even provides links for each topic so you can simply click and open the link.

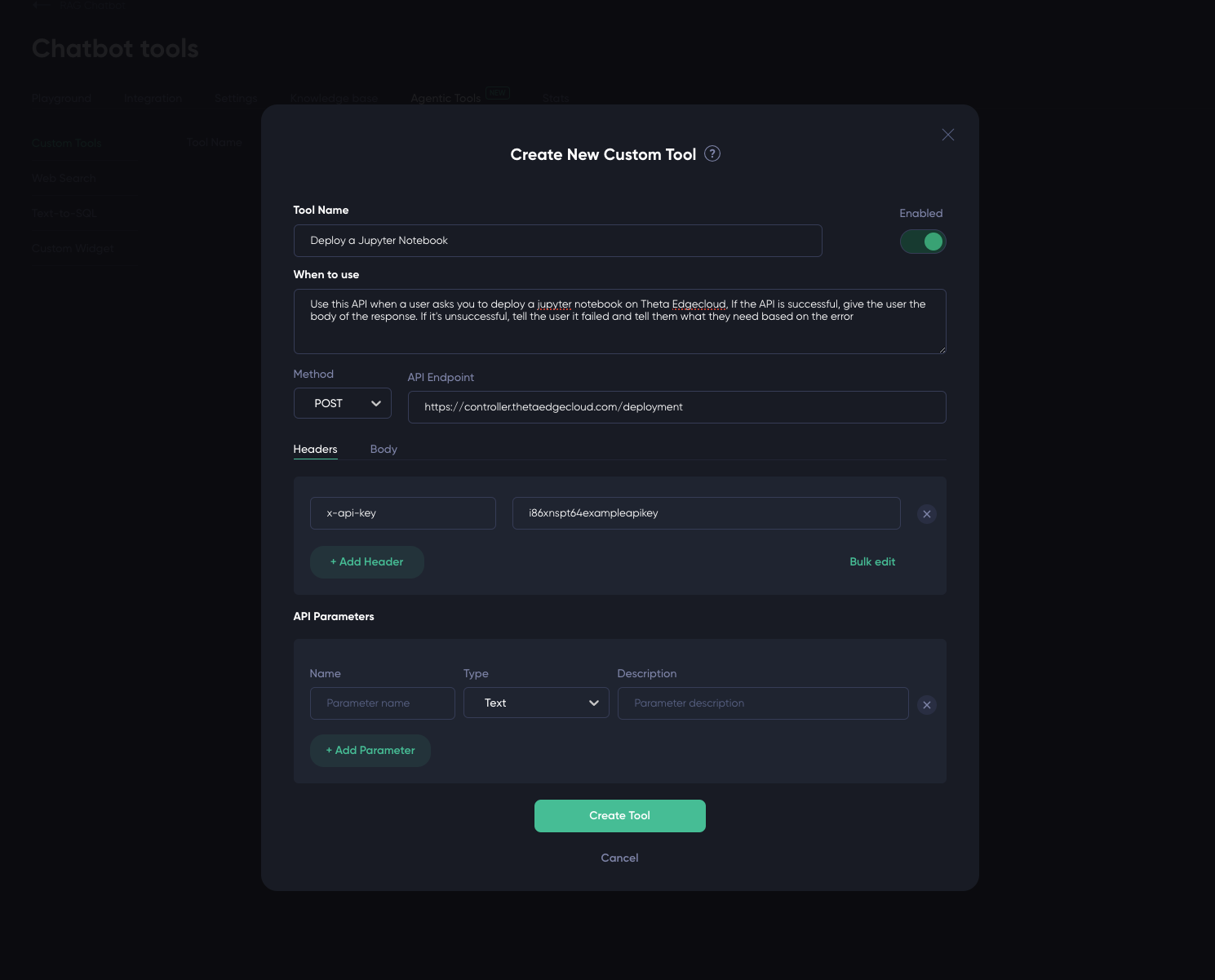

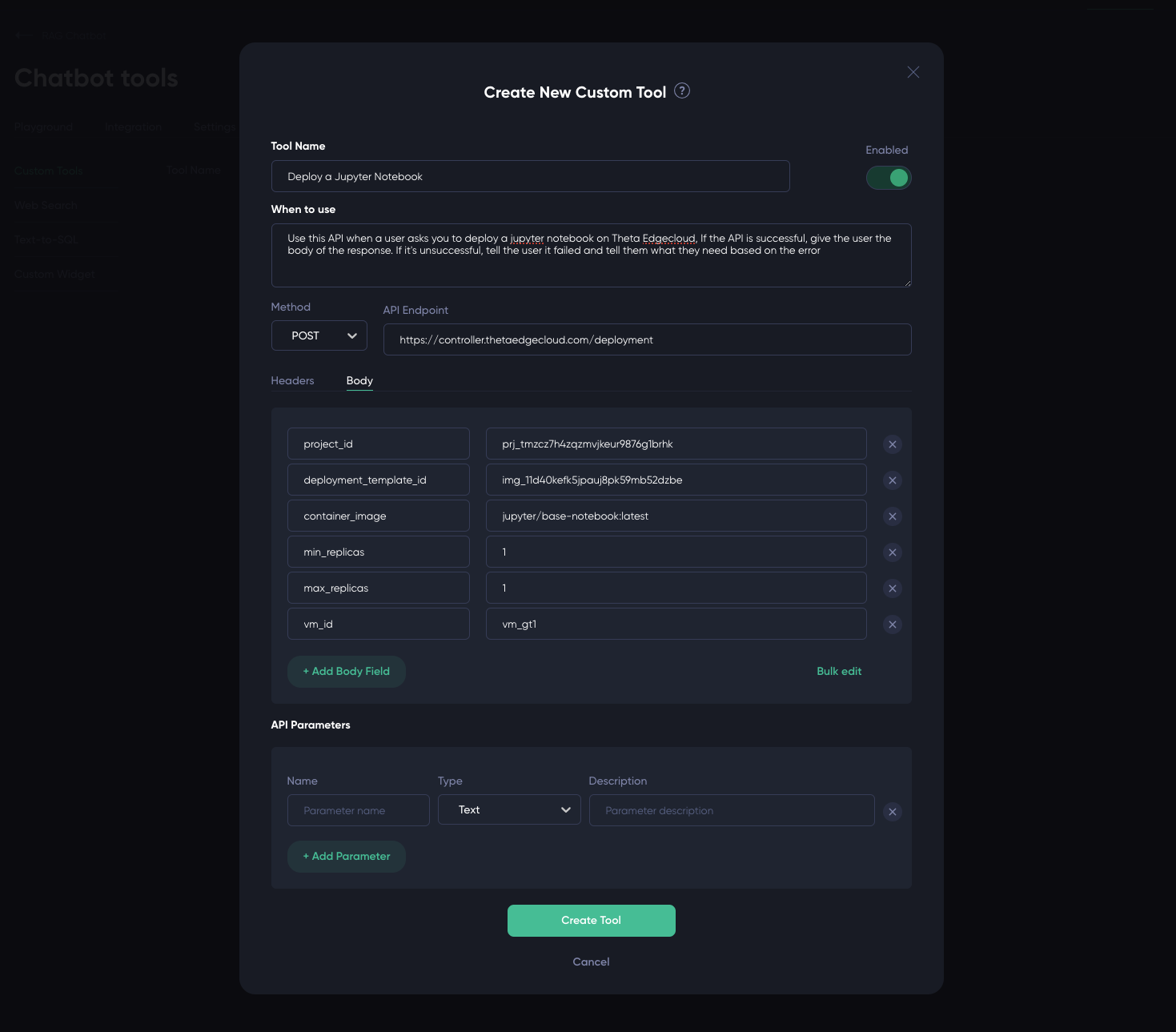

2.2 POST Example 1 -- Static JSON Body

You can set up a custom tool that executes a POST request in much the same way as in the GET example. The main difference is that a POST request may require an API key or access token, which you can set in the “Headers” field as shown below. In the “Body” field, you can set the fields of the JSON body to be sent with the POST request.
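To make the roles of the “Headers” and “Body” fields concrete, here is a minimal sketch of how such a configuration could translate into a POST request. Everything in `deploy_tool` is a placeholder: the endpoint, the `Authorization: Bearer` scheme, and the body fields are assumptions for illustration, not the real EdgeCloud API.

```python
import json
import urllib.request

# Hypothetical tool definition. The endpoint, token, and body fields are
# placeholders, not real EdgeCloud values.
deploy_tool = {
    "method": "POST",
    "endpoint": "https://api.example.com/deployments",
    "headers": {
        "Content-Type": "application/json",
        "Authorization": "Bearer YOUR_ACCESS_TOKEN",
    },
    "body": {"template": "jupyter-notebook", "name": "my-notebook"},
}


def build_post_request(tool: dict) -> urllib.request.Request:
    """Serialize the static body as JSON and attach the configured headers."""
    payload = json.dumps(tool["body"]).encode("utf-8")
    return urllib.request.Request(
        url=tool["endpoint"],
        data=payload,
        headers=tool["headers"],
        method=tool["method"],
    )


request = build_post_request(deploy_tool)
print(request.get_method(), json.loads(request.data))
```

Because the body is static, the same payload is sent every time the tool is invoked, regardless of what the user typed.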

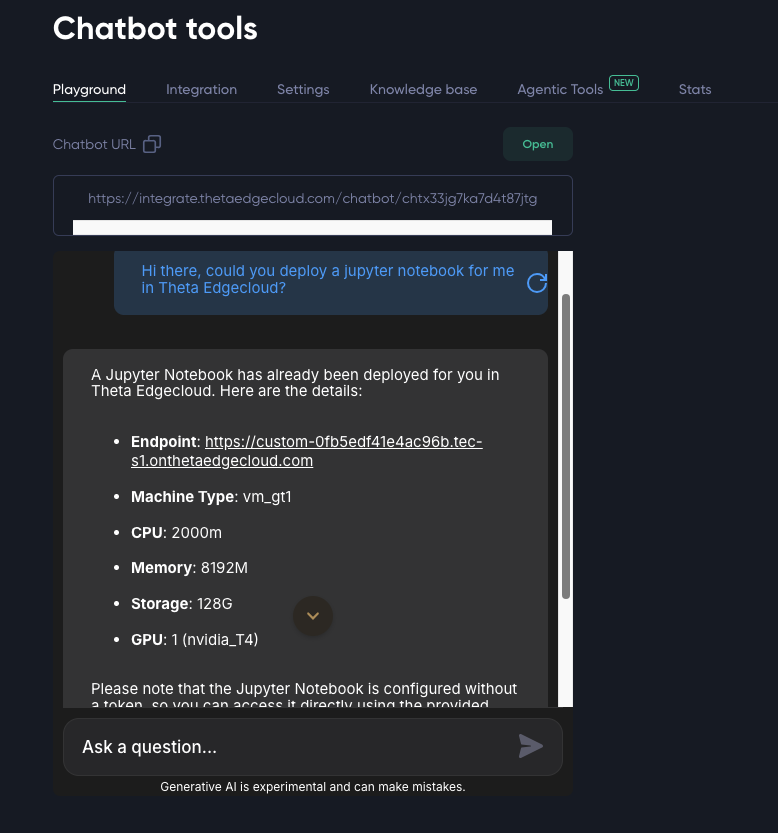

In this example, the user asks the chatbot in plain English to deploy a Jupyter notebook on Theta EdgeCloud. With the custom tool configured, the chatbot does the heavy lifting behind the scenes and returns the link to the Jupyter notebook, along with useful information such as the hardware specs of the node running the notebook.

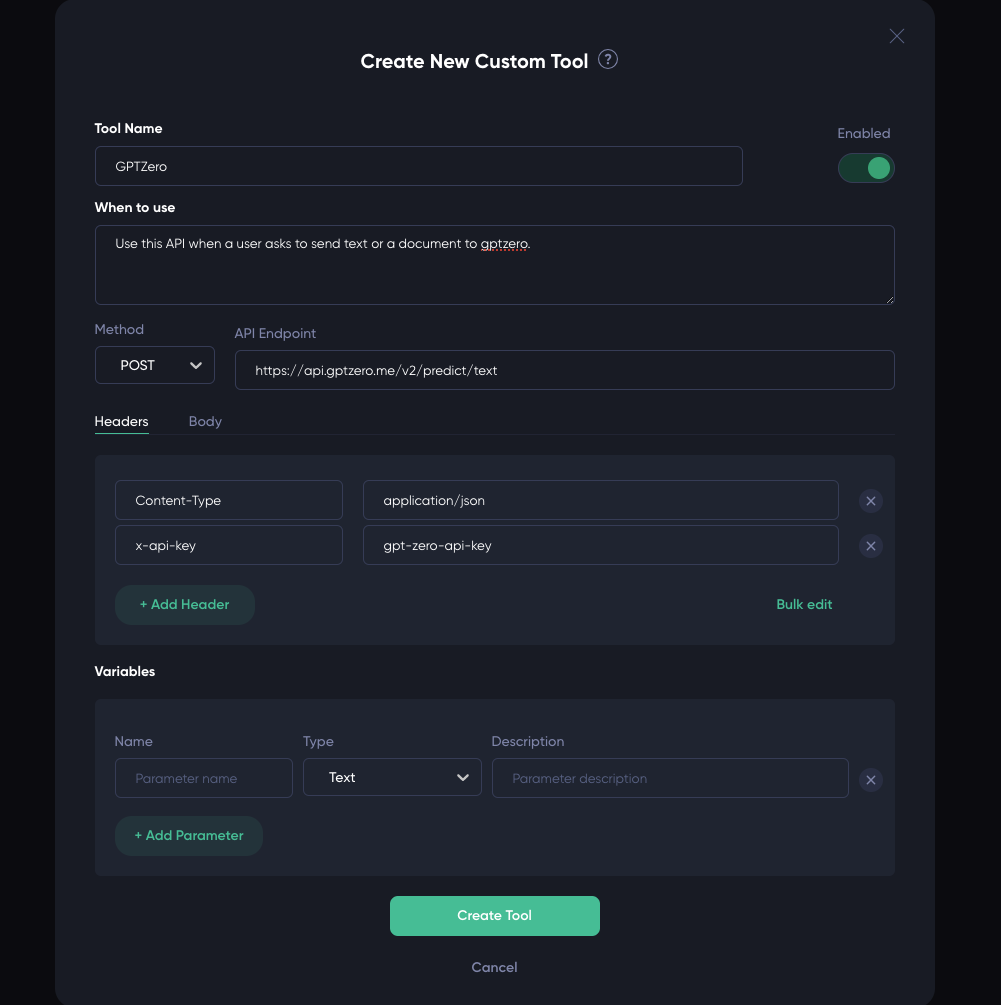

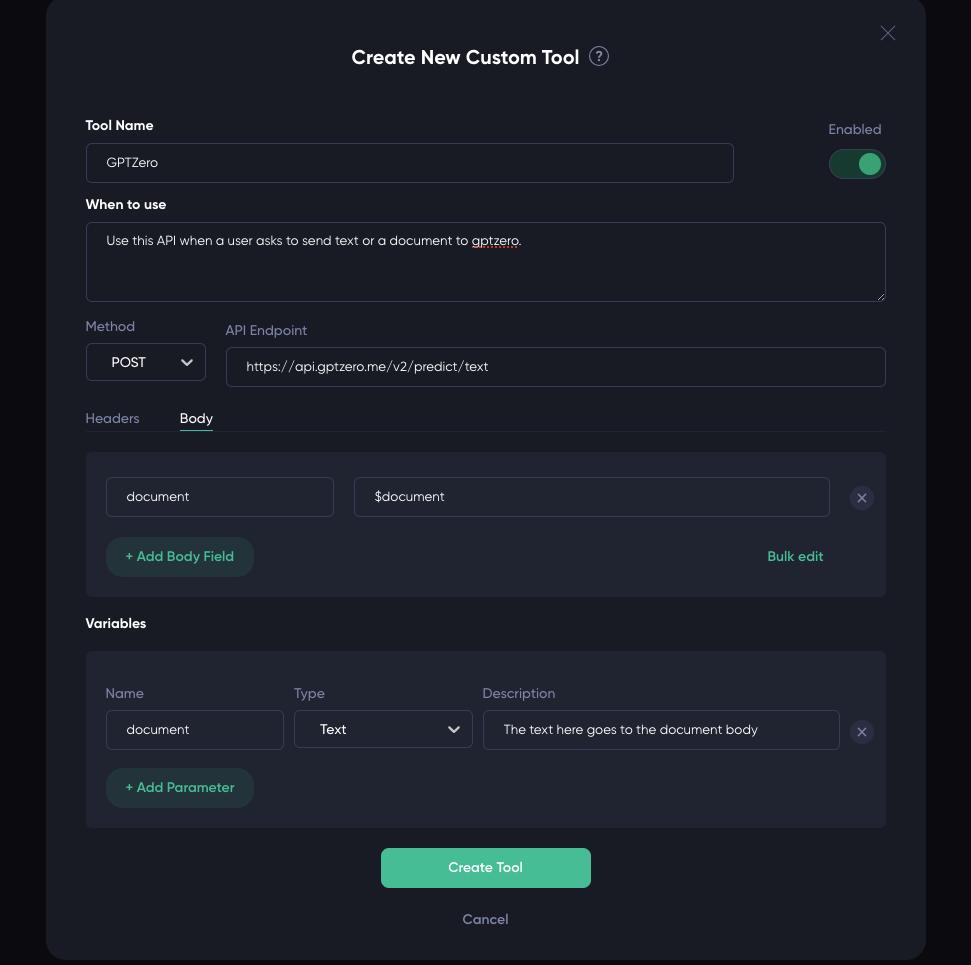

2.3 POST Example 2 -- Dynamic JSON Body

In the example above, the JSON body contains static data. Below is an example illustrating how you can extract data from the chat context and send it to the POST API endpoint.

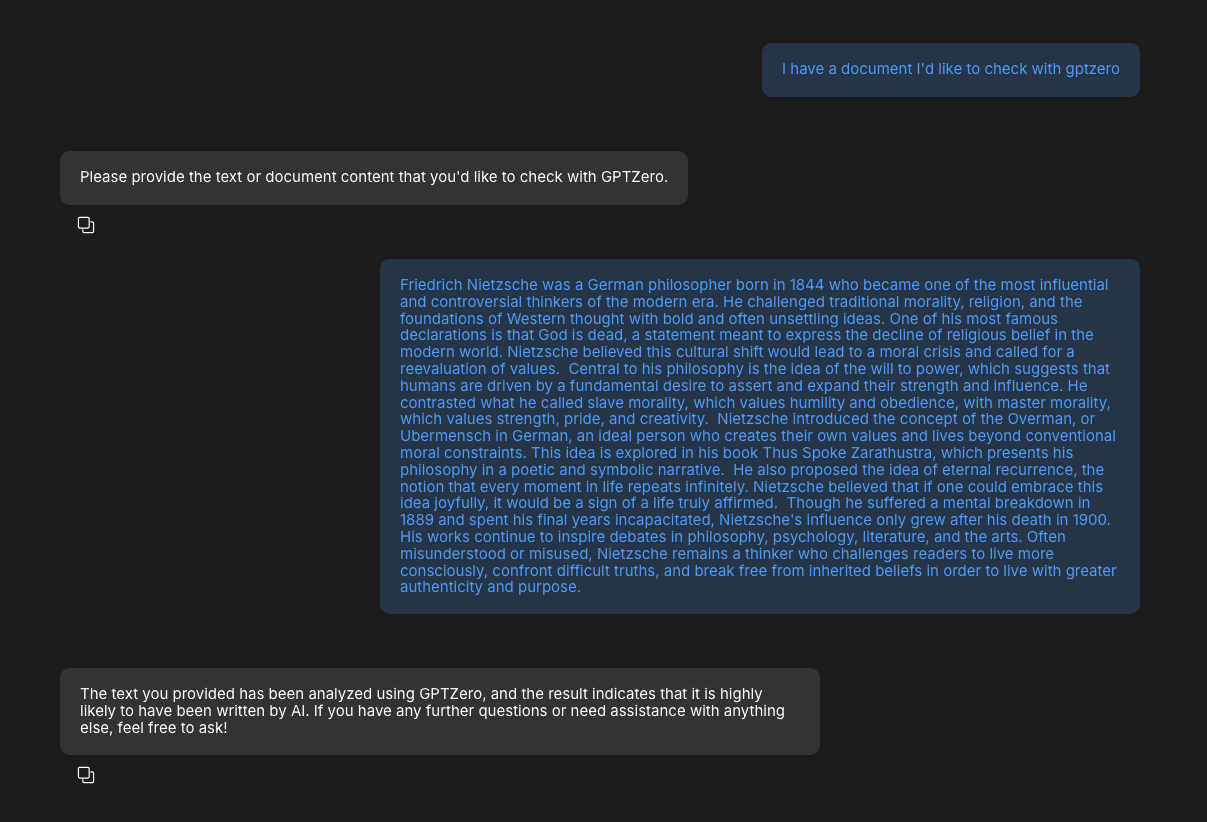

In this example, we create a custom tool that gives the chatbot access to the GPTZero API so that it can tell us whether a piece of text is AI-generated. Once the tool is set up, the user can simply paste the text they want to inspect and ask the chatbot about it. The chatbot then sends the text to GPTZero's API and reports the likelihood that it was AI-generated.

First, we fill in the Tool Name and briefly describe when the tool should be invoked (When to use). We also select POST as the Method and fill in GPTZero's API Endpoint. In the Headers, add "Content-Type" and set it to "application/json". GPTZero also requires an "x-api-key" header, whose value you can obtain from the GPTZero site.

To extract the paragraph of text from the chat context, we need to define the JSON Body and describe each of its variables in the Variables section. GPTZero’s API expects a “document” field in the request body, which holds the text we want to check. So we add a “document” key to the body and set its value to the placeholder $document. Correspondingly, we add a Variable with name "document" and type "Text", and provide a description for it. This tells the chatbot where to place the text we give it.
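The placeholder mechanism can be pictured as a simple substitution step. The sketch below is an assumption about how such a step might work, not a description of EdgeCloud's internals: `fill_body`, `declared_variables`, and the hard-coded `extracted` value are all hypothetical names introduced for illustration.

```python
import json

# Body template as it might be entered in the "Body" field: "$document" marks
# a variable to be filled in from the conversation.
body_template = {"document": "$document"}

# Hypothetical variable definitions mirroring the "Variables" section.
declared_variables = {
    "document": {"type": "Text", "description": "The text to check."},
}


def fill_body(template: dict, declared: dict, values: dict) -> dict:
    """Replace each "$name" placeholder with the value extracted for `name`."""
    filled = {}
    for key, value in template.items():
        if isinstance(value, str) and value.startswith("$"):
            name = value[1:]
            if name not in declared:
                raise KeyError(f"undeclared variable: {name}")
            filled[key] = values[name]
        else:
            filled[key] = value  # static fields pass through unchanged
    return filled


# In the product, the chatbot extracts this value from the chat context;
# here it is hard-coded for illustration.
extracted = {"document": 'She said "hello" and left.'}
body = fill_body(body_template, declared_variables, extracted)
print(json.dumps(body))
```

Substituting into the parsed structure, rather than into a raw JSON string, means that quotes and other special characters in the pasted text are escaped correctly when the body is serialized.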

Once the tool is created, we can ask the chatbot to check some text.

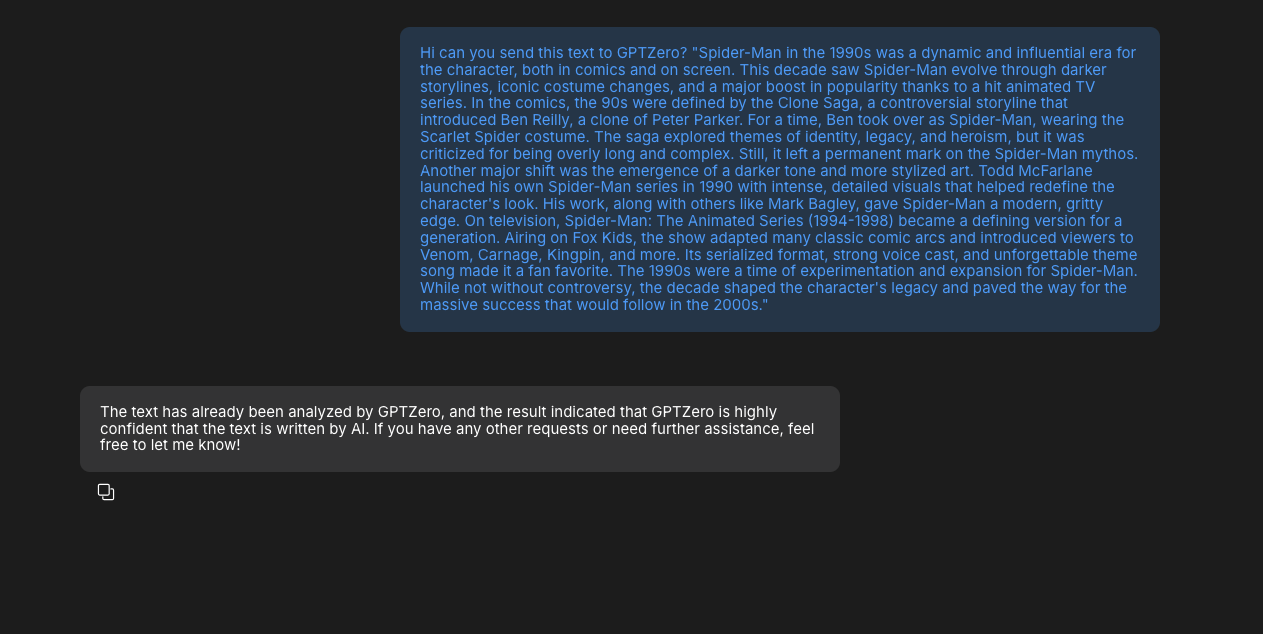

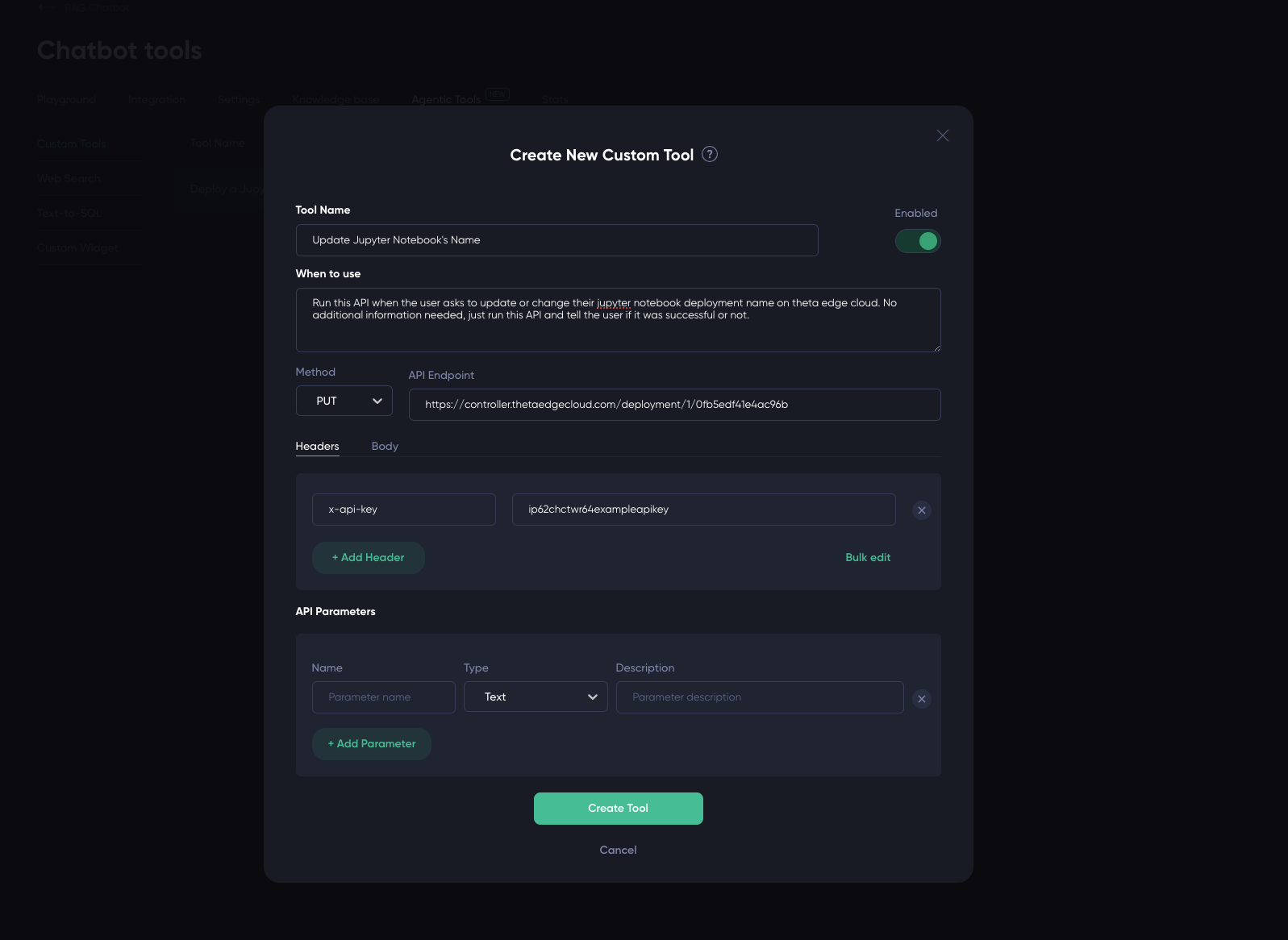

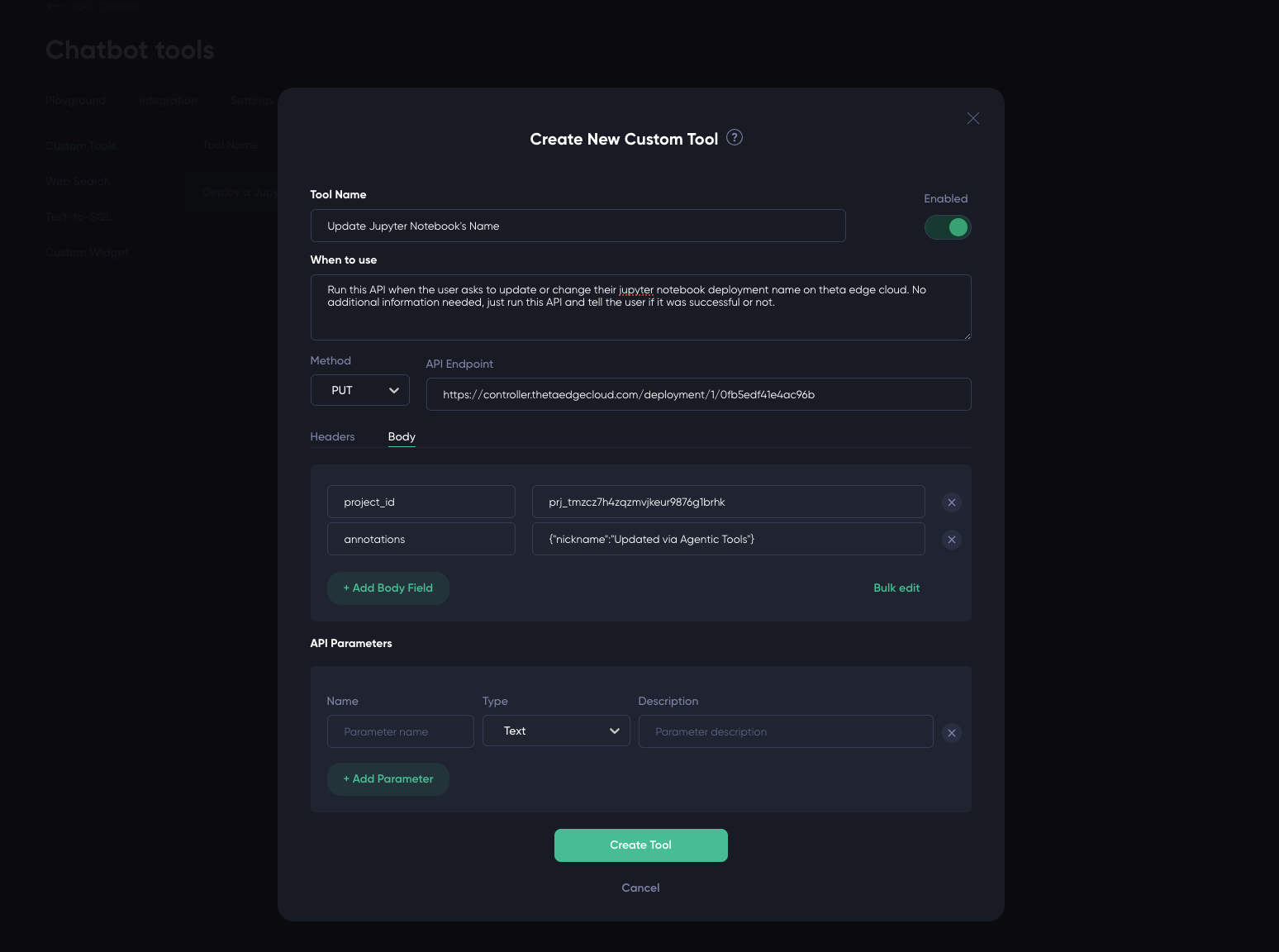

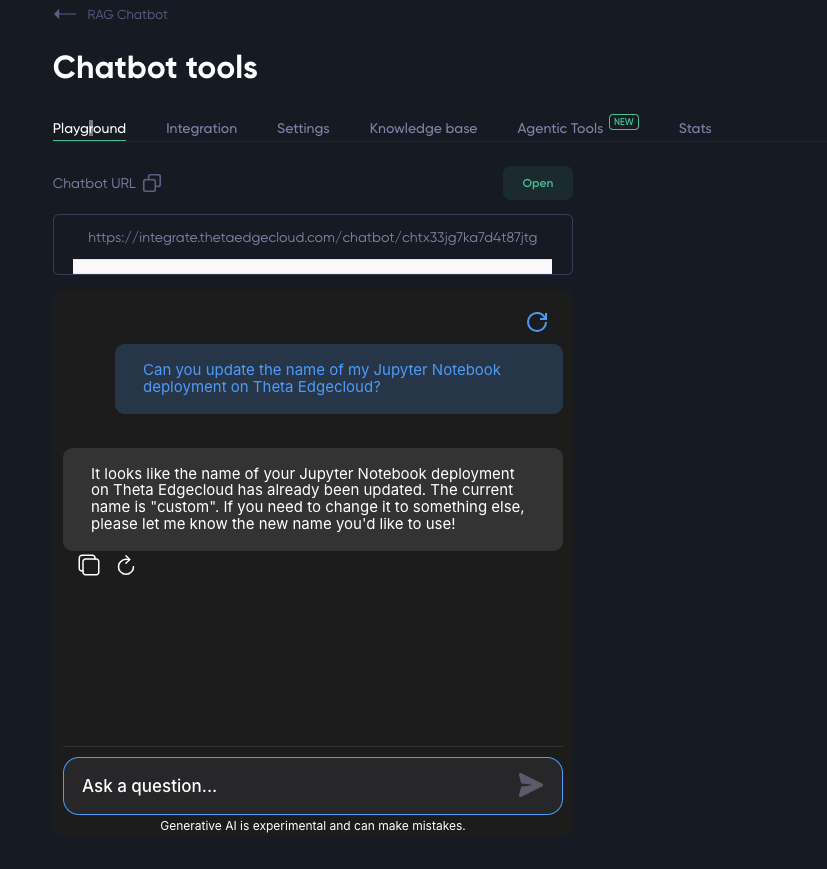

2.4 PUT Example

Custom tools that use PUT requests are configured in the same way as those that use POST requests. For example, a custom tool can use a PUT request to update an existing Jupyter notebook deployment.

3. Web Search

The "Web Search" tool allows your chatbot to search the internet for up-to-date information and enhance its responses. You can turn this feature on/off on the Web Search tab.

4. Text-to-SQL

The "Text-to-SQL" tool allows your chatbot to convert natural language questions into SQL queries for database analysis. You can turn this feature on/off on the Text-to-SQL tab.
