Community FAQ

Community FAQs for Theta EdgeCloud Hybrid Beta.

June 25, 2025 marks a major milestone for the Theta Network with the launch of the Theta EdgeCloud Hybrid Beta — a decentralized platform built to power high-performance computing at scale. With a hybrid architecture that leverages both traditional cloud GPUs and community-run edge nodes, EdgeCloud is designed to support AI inference, video processing, 3D rendering, and more.

Whether you’re contributing GPU power or submitting workloads to be completed on EdgeCloud, this FAQ will help you get started.

What Is Theta EdgeCloud?

Theta EdgeCloud is a decentralized GPU compute marketplace. It connects those who need access to GPU resources with those who have unused GPU capacity. Contributors can earn rewards in TFuel, while developers and organizations gain access to scalable, cost-efficient infrastructure.

For Node Operators: Contribute Your GPU and Earn TFuel

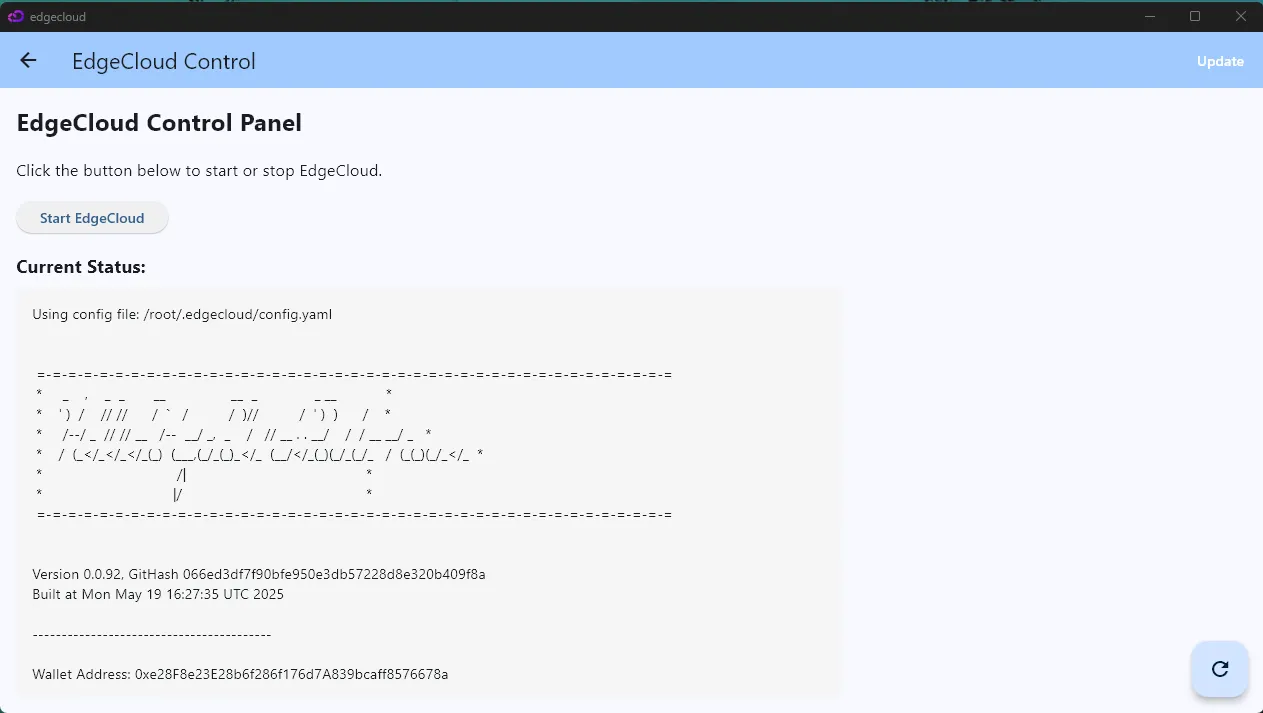

What is the EdgeCloud Client?

The EdgeCloud Client is a lightweight software package that lets you contribute your GPU power to Theta EdgeCloud. Once installed, your machine becomes part of a global infrastructure capable of running containerized jobs.

System Requirements

Recommended hardware:

- GPU: NVIDIA 3090, 4090, A100, H100 or similar (8GB VRAM minimum)

- CPU: Intel or AMD with 4 or more cores

- RAM: 16GB or more

- Disk: At least 256GB of free storage

- Internet: 100 Mbps upload and download

- OS: Ubuntu 22.04+ (recommended), Windows 10/11 (Alpha support)

Machines that meet the above specs are much more likely to get high-reward jobs. Clients who are used to cloud services like AWS or GCP may also come with high expectations, so aim for consistent uptime. While a job is running, the GPU will likely operate near full capacity, so make sure your internet connection, power supply, and cooling systems are in good shape.
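As a rough illustration, the recommended hardware list above can be turned into a quick self-check before you install the client. This is a minimal sketch, not part of the EdgeCloud Client: the dictionary keys and the `find_shortfalls` helper are hypothetical names, and you would fill in the machine values yourself.

```python
# Recommended minimums taken from the hardware list above. The key names
# are hypothetical and exist only for this sketch.
RECOMMENDED = {
    "vram_gb": 8,
    "cpu_cores": 4,
    "ram_gb": 16,
    "free_disk_gb": 256,
    "upload_mbps": 100,
    "download_mbps": 100,
}


def find_shortfalls(machine: dict) -> dict:
    """Return {spec: (actual, recommended)} for every spec below the recommendation."""
    shortfalls = {}
    for spec, required in RECOMMENDED.items():
        actual = machine.get(spec, 0)  # a missing value counts as 0
        if actual < required:
            shortfalls[spec] = (actual, required)
    return shortfalls


# Example values for an imaginary machine.
example_machine = {
    "vram_gb": 24,
    "cpu_cores": 8,
    "ram_gb": 32,
    "free_disk_gb": 200,
    "upload_mbps": 40,
    "download_mbps": 300,
}

for spec, (actual, required) in find_shortfalls(example_machine).items():
    print(f"{spec}: have {actual}, recommended {required}")
```

An empty result means every listed spec meets or exceeds the recommendation. It does not check the GPU model or operating system.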

How Are Rewards Calculated?

- Rewards are calculated in USD and converted to TFuel

- Payouts are made monthly between the 1st and 5th of each month

- Node operators can set their own hourly GPU rental rate

- The marketplace model allows demand-side users to select nodes based on price and performance
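To get a feel for the numbers, here is a minimal sketch of a payout estimate. It assumes the USD reward is simply rented hours multiplied by your hourly rate, converted at a single TFuel price. Both the formula and the `estimate_tfuel_payout` helper are illustrative assumptions; the actual calculation and conversion rate are determined by the platform.

```python
def estimate_tfuel_payout(hours_rented: float, hourly_rate_usd: float,
                          tfuel_price_usd: float) -> float:
    """Estimate a TFuel payout from rental hours and a USD hourly rate.

    Assumption for this sketch: reward = hours * rate, converted to TFuel
    at one fixed price. The real payout logic may differ.
    """
    if tfuel_price_usd <= 0:
        raise ValueError("tfuel_price_usd must be positive")
    usd_earned = hours_rented * hourly_rate_usd
    return usd_earned / tfuel_price_usd


# Made-up numbers, purely for illustration.
estimate = estimate_tfuel_payout(hours_rented=100, hourly_rate_usd=0.5,
                                 tfuel_price_usd=0.25)
print(f"Estimated payout: {estimate} TFuel")
```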

Getting Started

To install and run the EdgeCloud client, follow the official documentation:

- EdgeCloud Client Installation Guide

- Command-line (CLI) and RPC API references are also available for advanced users

For Job Submitters: Run High-Performance Workloads on EdgeCloud

What Types of Jobs Can I Run?

Theta EdgeCloud supports any containerized job requiring GPU acceleration, including:

- AI inference and generative AI models

- Text-to-image and chatbot applications

- Video encoding and transcoding

- 3D rendering pipelines

- Financial modeling and scientific simulations

Jobs are routed to the optimal mix of edge and cloud GPUs for efficient and cost-effective processing.

Submitting a Job

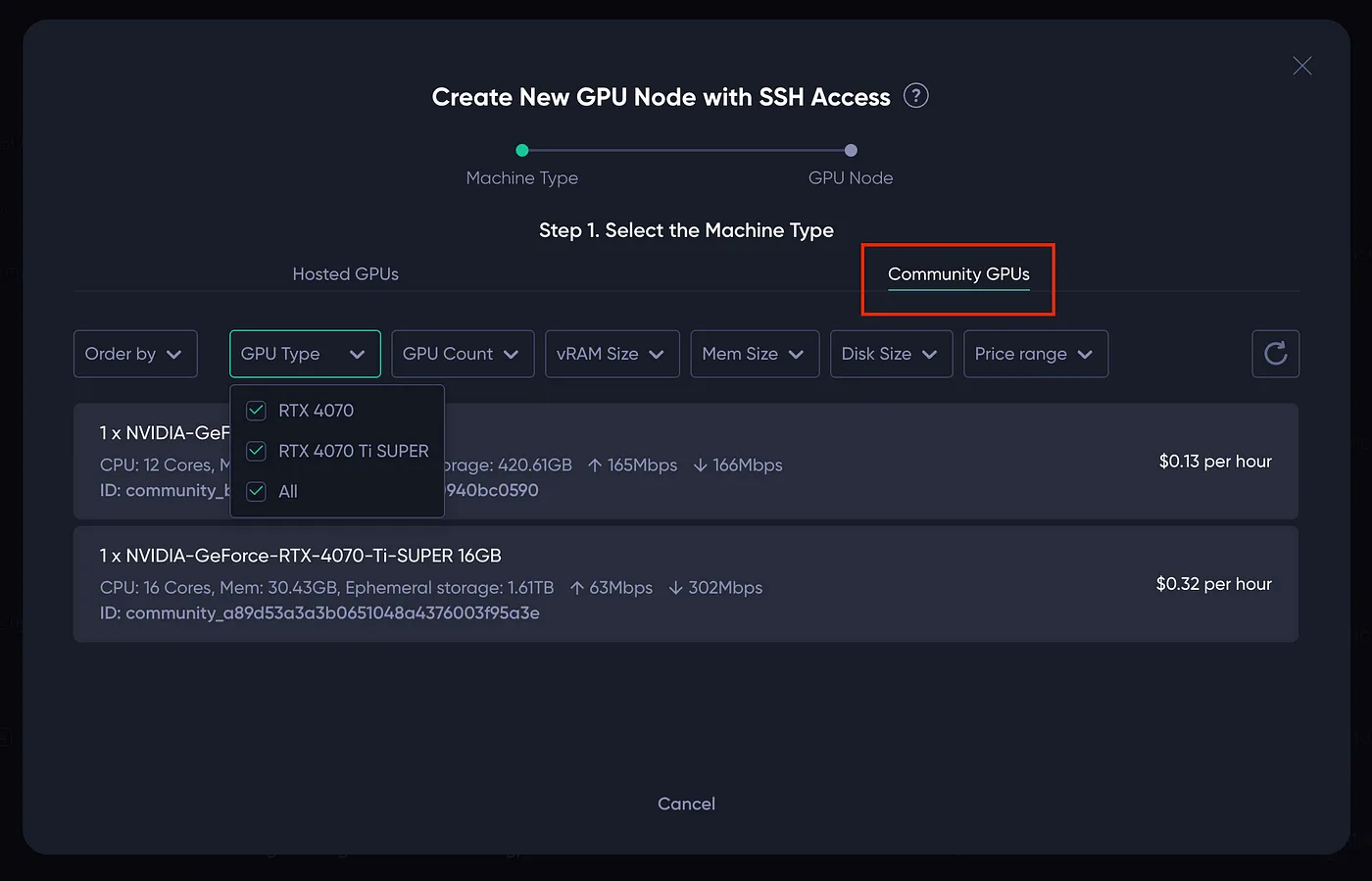

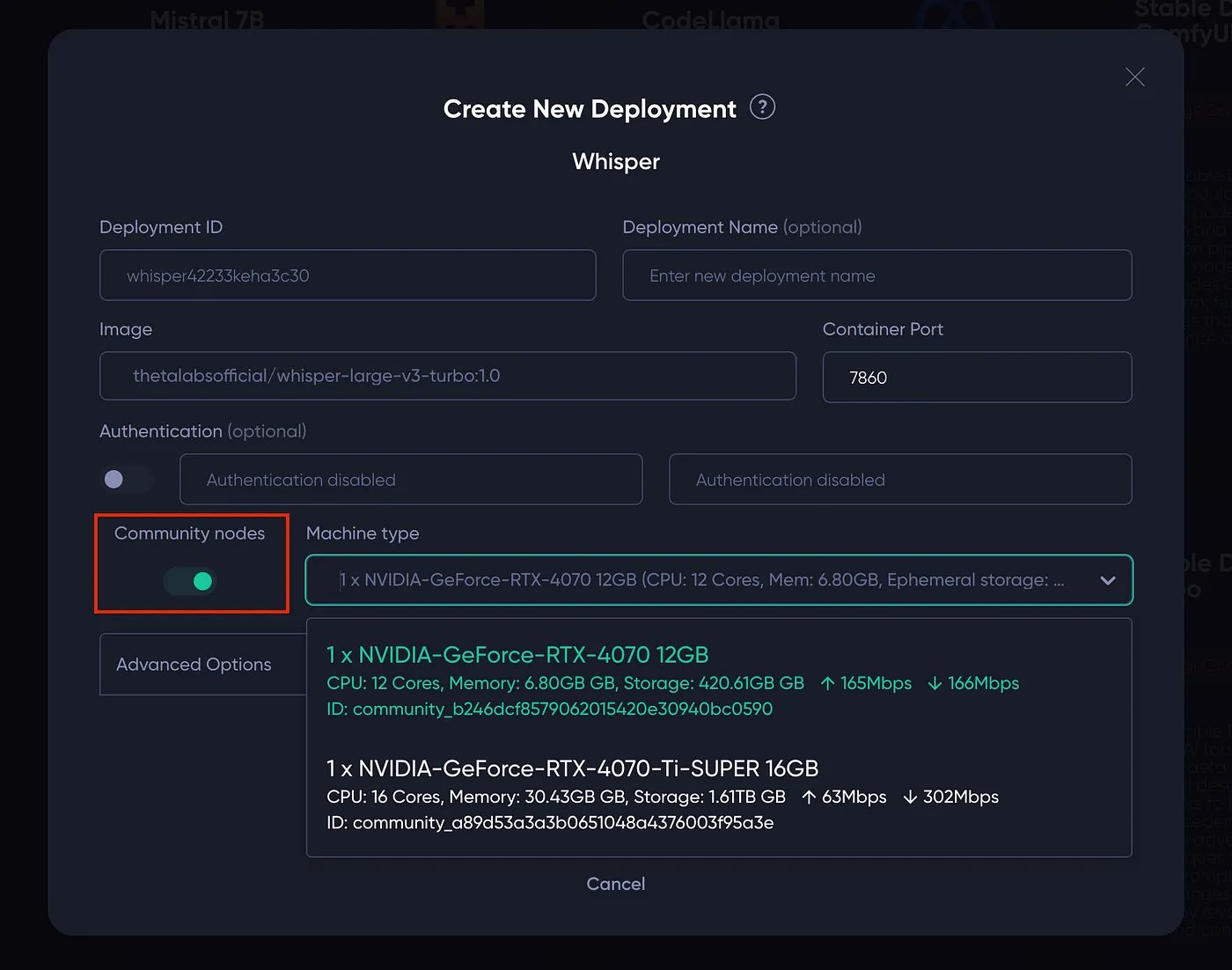

You can submit containerized jobs on Theta EdgeCloud to be completed on community-run GPUs using this guide. There are three initial ways to leverage community Edge Nodes:

-

Use Community Nodes as GPU Machines with SSH Access

-

Dedicated Model Deployment Onto Community Nodes

-

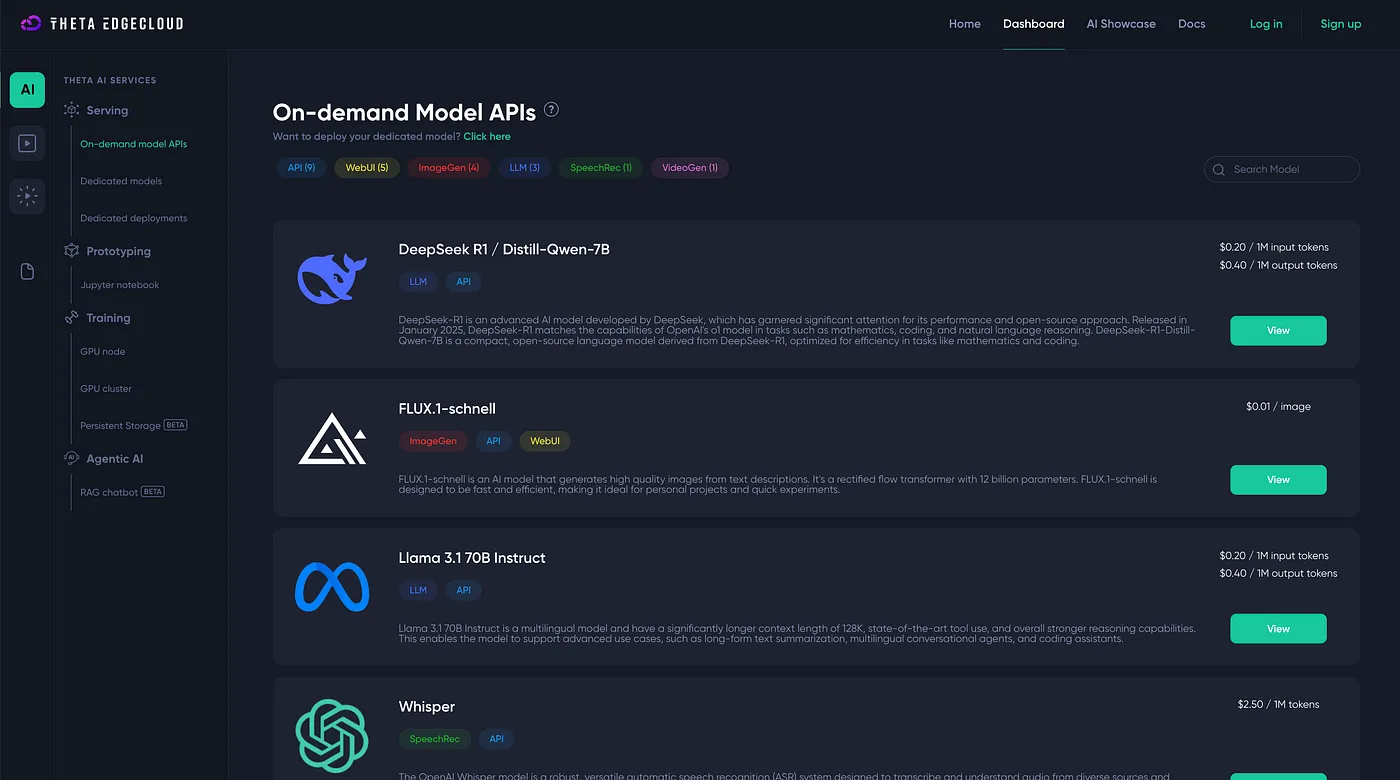

On-demand Model APIs Leveraging Community Nodes

Can I Select Specific GPUs?

Yes. Users can filter available nodes based on:

- GPU type (e.g., 4090 vs A100)

- Hourly rate

- Performance characteristics

This flexibility helps users balance cost and speed based on their project requirements.
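The filtering described above can be pictured with a small sketch. The `Node` record, its field names, and the `benchmark_score` field (a stand-in for "performance characteristics") are all hypothetical; they do not reflect the actual EdgeCloud API or data model.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    gpu_type: str
    hourly_rate_usd: float
    benchmark_score: float  # hypothetical performance measure


def select_nodes(nodes, gpu_type=None, max_hourly_rate=None, min_score=None):
    """Return the nodes that pass every supplied filter, cheapest first."""
    matches = [
        n for n in nodes
        if (gpu_type is None or n.gpu_type == gpu_type)
        and (max_hourly_rate is None or n.hourly_rate_usd <= max_hourly_rate)
        and (min_score is None or n.benchmark_score >= min_score)
    ]
    return sorted(matches, key=lambda n: n.hourly_rate_usd)


# Invented sample listings.
nodes = [
    Node("node-a", "4090", 0.40, 82.0),
    Node("node-b", "A100", 1.10, 95.0),
    Node("node-c", "4090", 0.35, 78.0),
    Node("node-d", "4090", 0.60, 90.0),
]

for node in select_nodes(nodes, gpu_type="4090", max_hourly_rate=0.50):
    print(node.name, node.hourly_rate_usd)
```

Leaving a filter as `None` skips it, so the same function covers "cheapest 4090" and "fastest node at any price" style queries.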

What Happens If a Node Goes Offline?

EdgeCloud supports:

- Automatic failover

- Job containerization

- Intelligent reallocation of workloads

If a node goes offline mid-task, the system will reroute the job to another available GPU to ensure completion without manual intervention.
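Conceptually, rerouting works like trying the next available node when the current one drops out. The sketch below shows only that general idea. It is not how EdgeCloud implements failover, and `NodeOffline`, `run_with_failover`, and the runner functions are hypothetical names.

```python
class NodeOffline(Exception):
    """Raised by a runner when its node drops out mid-task (hypothetical)."""


def run_with_failover(job, runners):
    """Try each (name, run) pair in order; move on if a node goes offline."""
    failed = []
    for name, run in runners:
        try:
            return name, run(job)
        except NodeOffline:
            failed.append(name)  # remember the node and try the next one
    raise RuntimeError(f"no node completed the job; tried {failed}")


# Two stand-in nodes: one that fails, one that finishes the job.
def offline_node(job):
    raise NodeOffline("edge-1 went offline")


def healthy_node(job):
    return f"{job} done"


runners = [("edge-1", offline_node), ("edge-2", healthy_node)]
print(run_with_failover("render-frame-42", runners))
```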

Developer Features in Beta

Available at launch:

- API access for job submission

- Intelligent job routing across edge and cloud

- Node performance monitoring

Coming soon:

- Job opt-out and preference settings for node operators

- Enhanced marketplace insights

Who Should Use Theta EdgeCloud?

Theta EdgeCloud is designed for:

- Developers building GenAI tools and AI agents

- Video professionals handling large-scale transcoding

- Researchers running scientific simulations

- GPU owners looking to earn passive income from idle hardware

EdgeCloud Control Panel - Troubleshooting FAQ

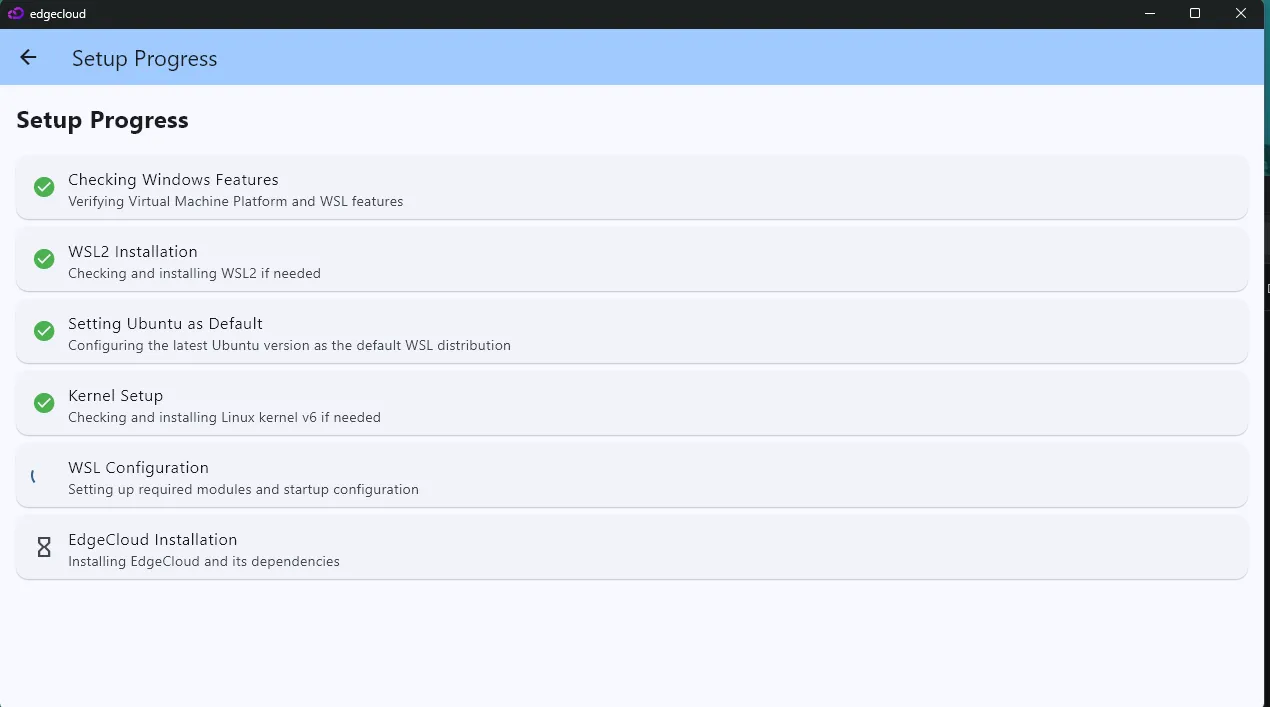

Many errors in the EdgeCloud Control Panel trace back to your WSL (Windows Subsystem for Linux) environment, especially the Ubuntu version and Linux kernel. This FAQ helps you diagnose and fix these common problems.

Why am I seeing errors in the EdgeCloud Control Panel?

Most errors indicate an issue with your WSL setup. The EdgeCloud software depends on specific WSL configurations, including the Ubuntu version and Linux kernel. If these don’t meet the requirements, the control panel may fail.

What Ubuntu version do I need for WSL?

- Minimum: Ubuntu 22.04 (Jammy Jellyfish)

- Recommended: Ubuntu 24.04 (Noble Numbat)

Using anything older than 22.04 often causes problems.

How can I see which Ubuntu distribution is installed in WSL?

Open PowerShell and run:

wsl --list

Look for names like Ubuntu or Ubuntu-22.04.

How do I check the OS version of my Ubuntu in WSL?

Run in PowerShell:

wsl -u root cat /etc/os-release

Look for VERSION_ID. It should be 22.04 or higher.
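If you would rather check the version programmatically, the following minimal sketch parses the text of /etc/os-release and compares VERSION_ID against 22.04. The helper names are hypothetical, and the sample text is a shortened example rather than real output from your machine.

```python
def parse_os_release(text: str) -> dict:
    """Parse KEY=value lines from /etc/os-release into a dictionary."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"')  # drop surrounding quotes
    return fields


def ubuntu_version_ok(text: str, minimum=(22, 4)) -> bool:
    """Return True if VERSION_ID is at least the minimum (major, minor)."""
    version_id = parse_os_release(text).get("VERSION_ID", "")
    try:
        major, minor = (int(part) for part in version_id.split(".")[:2])
    except ValueError:
        return False  # missing or unexpected VERSION_ID format
    return (major, minor) >= minimum


# Shortened sample of what the file may contain.
sample = '''NAME="Ubuntu"
VERSION_ID="22.04"
ID=ubuntu
'''
print(ubuntu_version_ok(sample))
```

You would pass in the text printed by the command above, for example after copying it into a file.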

What Linux kernel version does WSL need?

You need kernel version 6.x or above. Check with:

wsl -u root uname -r

It should show something like 6.6.87.2-microsoft-standard-WSL2.
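The same check can be expressed in a few lines of code. This is a minimal sketch that reads the major version from a kernel release string such as the one shown above. `kernel_major` and `kernel_ok` are hypothetical helper names, and the 5.x string in the example is an illustrative value.

```python
import re


def kernel_major(release: str) -> int:
    """Extract the leading major version from a kernel release string."""
    match = re.match(r"(\d+)\.", release.strip())
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1))


def kernel_ok(release: str, minimum_major: int = 6) -> bool:
    """Return True if the kernel major version meets the minimum."""
    return kernel_major(release) >= minimum_major


print(kernel_ok("6.6.87.2-microsoft-standard-WSL2"))
print(kernel_ok("5.15.153.1-microsoft-standard-WSL2"))
```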

My kernel is still on 5.x. How do I update it?

- Open PowerShell as Administrator.

- Run:

Get-AppxPackage MicrosoftCorporationII.WindowsSubsystemforLinux -AllUsers | Remove-AppxPackage

- Then update WSL:

wsl --update --web-download

- Check again with:

wsl -u root uname -r

You should see a version starting with 6.

How do I remove an outdated Ubuntu WSL distribution?

- List your distros:

wsl --list

- Unregister the old one:

wsl --unregister <distro_name>

Replace <distro_name> with the actual name, like Ubuntu or Ubuntu-20.04.

Note: After unregistering a distro, you can return to the setup process of the EdgeCloud Windows application, which should guide you through installing the correct Ubuntu version.

Do I need to accept admin prompts during setup?

Yes. Always click “Yes” on administrator pop-ups during EdgeCloud setup. These allow WSL and its dependencies to install correctly.

If needed, you can run the EdgeCloud app as administrator during the initial setup. Afterward, run it normally (non-admin).

I get an error saying: Failed to check/enable Windows features: Failed to check Windows features: Failed to run setup script. What does this mean?

This usually means WSL is not enabled in your Windows Features. To fix:

- Open PowerShell as Administrator and run:

dism.exe /online /enable-feature /featurename:Microsoft-Windows-Subsystem-Linux /all /norestart

- Also enable the Virtual Machine Platform:

dism.exe /online /enable-feature /featurename:VirtualMachinePlatform /all /norestart

- Restart your PC.

After this, try the EdgeCloud setup again.
